Database Systems: Core Concepts and Fundamentals

Database concepts define how information is stored, organized, and processed in digital systems. Databases provide structured data storage, controlled access, and reliable retrieval for applications, services, and analytical platforms.

Modern data systems rely on scalable databases such as the Exasol Analytics Engine for high-performance data storage and querying.

This page explains the fundamentals of databases, including basic structures, core components, and operational principles. It covers how data is modeled, how database systems manage storage and access, and how information flows through database architectures.

The structure and scope of these foundational concepts align with academic frameworks used in database systems education and curriculum research. The goal is to provide a clear conceptual framework for understanding databases as technical systems, from core structures to modern platform architectures.

What Is a Database?

A database is a structured system for storing, organizing, and managing data. It provides persistent storage, controlled access, and consistent retrieval of information for software applications and digital services.

Databases separate data from application logic. This separation allows multiple systems and users to access the same data while maintaining consistency, integrity, and security. Data is stored in defined structures that support efficient querying, updates, and long-term persistence.

Test Real Analytical Workloads with Exasol Personal

Unlimited data. Full analytics performance.

Modern databases operate as core infrastructure components. They support transactional systems, analytical systems, and data platforms by providing reliable data storage, structured access models, and standardized interfaces for data processing.

Database Fundamentals

Database fundamentals describe the core principles that govern how data is stored, organized, and accessed. At the most basic level, databases provide persistent storage that remains available beyond application runtime and system sessions.

Data is organized into defined structures that support consistency and reliability. These structures enable controlled access, predictable retrieval, and systematic modification of stored information. Databases enforce rules that preserve data integrity, prevent corruption, and maintain stable system behavior under concurrent use.

Fundamental database design also separates storage from processing. Storage layers manage persistence and structure, while processing layers handle queries, updates, and data access logic. This separation allows databases to scale, support multiple users, and operate as shared infrastructure across applications and systems.

Basic Database Structure

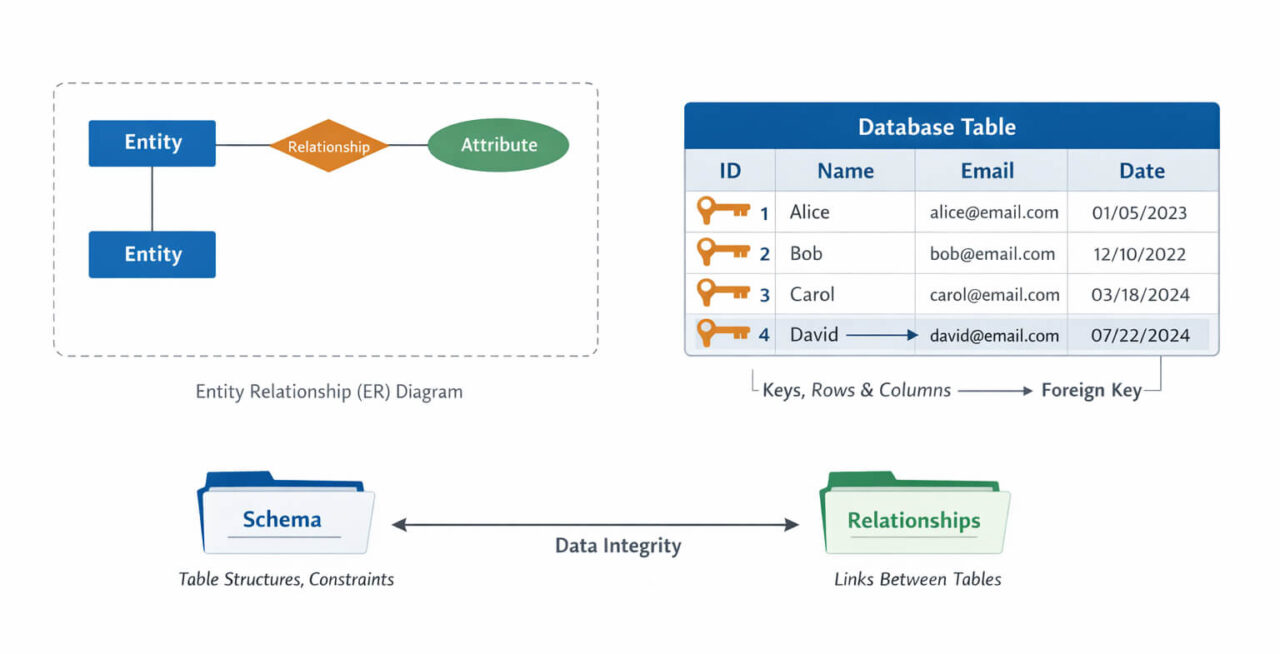

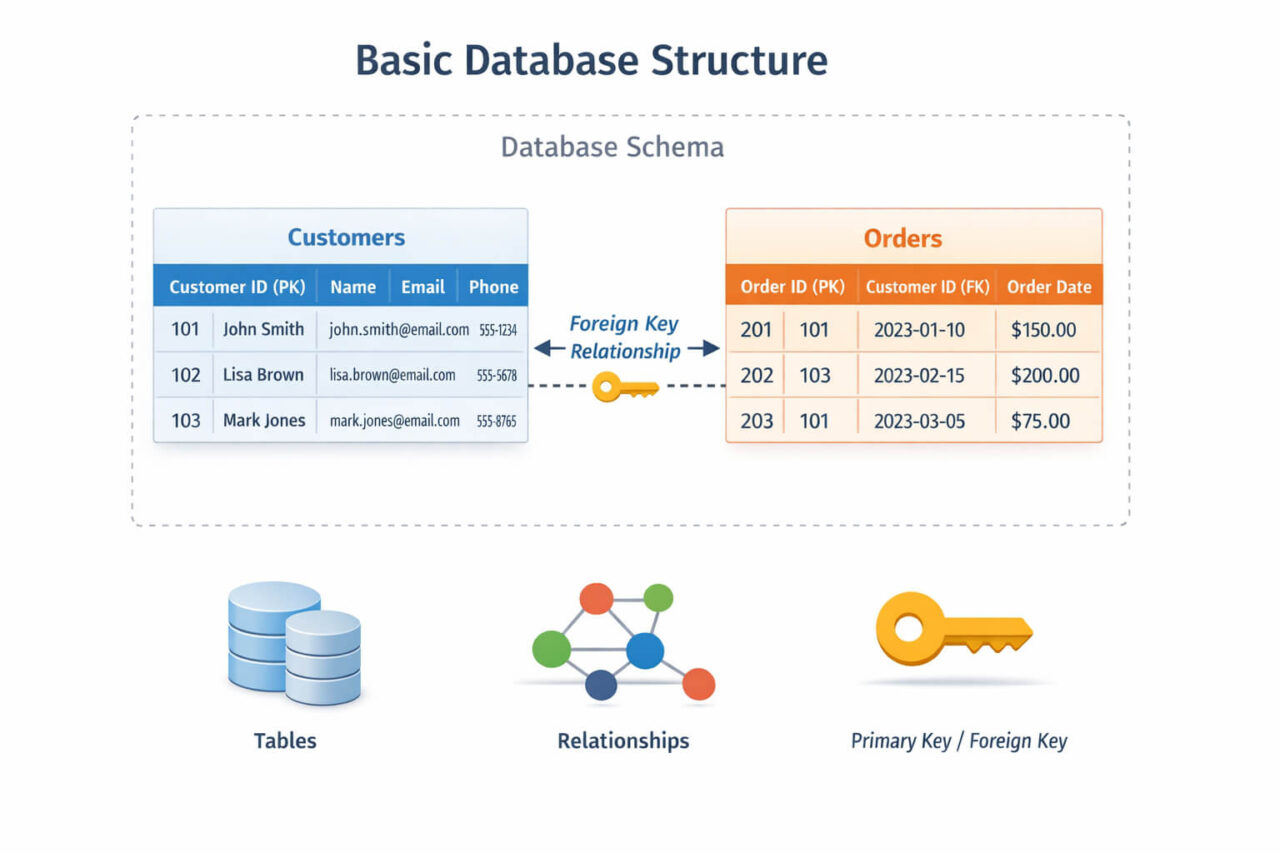

A basic database structure defines how data is organized and related within a system. These structures provide the foundation for storing, retrieving, and managing information in a consistent and predictable way.

Tables store data in organized formats. Each table represents a specific data entity, such as users, transactions, or products.

Rows (records) represent individual entries within a table. Each row contains a complete set of values for a single data item.

Columns (fields) define the attributes of the data. Each column stores a specific type of information, such as names, identifiers, or timestamps.

A schema defines the structural blueprint of the database. It specifies how tables are organized, how data types are defined, and how entities relate to each other. Schemas provide structure, consistency, and governance across the database.

Relationships connect data across tables. These relationships define how entities interact and allow data to be combined across different structures.

Primary keys uniquely identify each record in a table.

Foreign keys create references between tables and enforce relational integrity.

Indexes improve data retrieval performance. They create optimized lookup structures that allow database systems to locate records efficiently without scanning entire tables.

Organizing data into structured tables, relationships, and constraints follows the relational model, the established paradigm described in academic database research.

Together, these components form the structural foundation of a database system and define how data is stored, linked, and accessed.
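The components above can be sketched with Python's built-in `sqlite3` module. This is a minimal illustration, not a recommended design: the `users` and `orders` tables, their columns, and the index name are hypothetical, and SQLite is used only because it ships with Python.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with two related tables and one index."""
    conn = sqlite3.connect(":memory:")
    # SQLite only enforces foreign keys when this pragma is enabled.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(
        """
        CREATE TABLE users (
            user_id INTEGER PRIMARY KEY,  -- primary key: unique per row
            name    TEXT NOT NULL         -- column: one attribute of a user
        );
        CREATE TABLE orders (
            order_id INTEGER PRIMARY KEY,
            user_id  INTEGER NOT NULL REFERENCES users(user_id),  -- foreign key
            amount   REAL NOT NULL
        );
        -- index: lookup structure for finding orders by user
        CREATE INDEX idx_orders_user ON orders(user_id);
        """
    )
    conn.execute("INSERT INTO users (user_id, name) VALUES (1, 'Ada')")
    conn.execute("INSERT INTO orders (order_id, user_id, amount) VALUES (10, 1, 25.0)")
    conn.commit()
    return conn


def insert_orphan_order(conn: sqlite3.Connection) -> bool:
    """Try to insert an order for a user that does not exist."""
    try:
        conn.execute("INSERT INTO orders (order_id, user_id, amount) VALUES (11, 999, 5.0)")
        return True
    except sqlite3.IntegrityError:
        # The foreign key constraint rejected the row.
        return False


conn = build_demo_db()
# The relationship lets rows from both tables be combined.
joined = conn.execute(
    "SELECT u.name, o.amount FROM users u JOIN orders o ON o.user_id = u.user_id"
).fetchall()
orphan_accepted = insert_orphan_order(conn)
```

Here `joined` holds the combined user and order row, and `orphan_accepted` is `False` because the foreign key rejects an order that points at a missing user.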

DBMS (Database Management Systems)

A DBMS (Database Management System) is the software layer that controls how data is stored, accessed, and managed within a database. It acts as the interface between applications and the underlying data structures.

The DBMS manages core operational functions, including data storage, query execution, access control, and transaction handling. It enforces rules that maintain data integrity, consistency, and reliability across multiple users and systems.

Database management systems also control concurrency and isolation. They coordinate simultaneous operations, prevent conflicts between transactions, and ensure stable system behavior under parallel workloads. Through these mechanisms, the DBMS provides a controlled environment for secure and reliable data management.

SQL and Data Access

Databases expose data through standardized access layers that allow applications and systems to retrieve and modify stored information. Query languages provide a structured interface for interacting with database systems without direct access to storage structures.

SQL (Structured Query Language) represents the most widely used model for data access in relational databases. It defines how data is selected, inserted, updated, and managed through declarative queries that describe what data is required rather than how it is physically retrieved.

At a system level, data access operates as an abstraction layer. Applications interact with logical data models, while the database system translates these requests into storage operations, execution plans, and retrieval processes. This separation enables consistency, security, and controlled access across users and systems.
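As a rough sketch of declarative access, the example below issues INSERT, UPDATE, and SELECT statements through Python's `sqlite3` module. The `products` table and its values are made up for illustration; the point is that each statement says what data is wanted and leaves the retrieval strategy to the database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)")

# INSERT: add rows. The "?" placeholders keep values separate from the SQL text.
conn.executemany(
    "INSERT INTO products (id, name, price) VALUES (?, ?, ?)",
    [(1, "pen", 1.5), (2, "notebook", 4.0), (3, "bag", 30.0)],
)

# UPDATE: change every row that matches a condition.
conn.execute("UPDATE products SET price = ? WHERE name = ?", (2.0, "pen"))

# SELECT: describe the wanted result; the engine decides how to fetch it.
cheap_products = conn.execute(
    "SELECT name, price FROM products WHERE price < ? ORDER BY price", (5.0,)
).fetchall()
```

The application never touches files or pages directly; it only sees the logical table and the rows returned in `cheap_products`.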

Hybrid access patterns and abstraction models are explored in virtual schema models for hybrid data access.

Core Database Concepts

Core database concepts define how data systems maintain consistency, reliability, and predictable behavior during operation. These principles govern how data is modeled, processed, and protected within database environments.

Data modeling defines how information is structured to represent real-world entities and relationships. It establishes logical representations that support consistency and scalable system design.

Normalization organizes data to reduce redundancy and maintain structural integrity across related entities.
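To make the idea of reducing redundancy concrete, here is a small sketch in plain Python. The flat order records and the `normalize` helper are hypothetical; they only illustrate moving repeated customer details into their own table and referencing them by an identifier.

```python
# Flat records repeat the customer's city on every order.
flat_orders = [
    {"order_id": 1, "customer": "Ada", "city": "London", "item": "pen"},
    {"order_id": 2, "customer": "Ada", "city": "London", "item": "bag"},
    {"order_id": 3, "customer": "Bob", "city": "Paris", "item": "pen"},
]


def normalize(flat):
    """Split flat order records into a customers table and an orders table."""
    customers = {}  # customer name -> customer row
    orders = []
    for rec in flat:
        name = rec["customer"]
        if name not in customers:
            # Store each customer's details exactly once.
            customers[name] = {
                "customer_id": len(customers) + 1,
                "name": name,
                "city": rec["city"],
            }
        # Orders keep only a reference to the customer.
        orders.append({
            "order_id": rec["order_id"],
            "customer_id": customers[name]["customer_id"],
            "item": rec["item"],
        })
    return list(customers.values()), orders


customer_rows, order_rows = normalize(flat_orders)
```

After the split, a change to a customer's city touches one row in `customer_rows` instead of every matching order.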

Transactions group operations into controlled execution units. They ensure that data changes occur in a consistent and reliable manner. These transactional guarantees are defined as ACID properties in relational systems, which describe atomicity, consistency, isolation, and durability.

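Atomicity treats all operations in a transaction as a single unit: either every change is applied or none is.

Consistency ensures that each transaction moves the database from one valid state to another.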

Isolation separates concurrent operations to prevent interference.

Durability ensures that committed data changes persist beyond system failures.
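The following sketch shows atomicity and consistency together, using `sqlite3` and a hypothetical `accounts` table. It assumes a `CHECK` constraint as the consistency rule; when the second statement of a transfer violates it, the first statement is rolled back as well.

```python
import sqlite3


def transfer(conn, src, dst, amount):
    """Move `amount` between two accounts as one atomic unit."""
    # "with conn" commits on success and rolls back if an exception escapes.
    with conn:
        conn.execute(
            "UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst)
        )
        conn.execute(
            "UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src)
        )


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    "id INTEGER PRIMARY KEY, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"  # consistency rule
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
conn.commit()

transfer(conn, 1, 2, 30)  # both updates succeed and are committed

try:
    # The credit succeeds, the debit violates the CHECK constraint,
    # so the whole transaction is rolled back.
    transfer(conn, 1, 2, 500)
    failed_transfer_applied = True
except sqlite3.IntegrityError:
    failed_transfer_applied = False

balances = conn.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall()
```

Only the first transfer is reflected in `balances`; the partial credit from the failed transfer does not survive.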

Query processing controls how requests are interpreted and executed by the database system. Logical queries are transformed into execution plans that determine how data is accessed, combined, and returned. Execution models coordinate storage access, computation, and resource allocation to maintain stable performance and predictable behavior.
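One way to observe an execution plan is SQLite's `EXPLAIN QUERY PLAN` statement, shown here through Python's `sqlite3` module. The `events` table and `plan_for` helper are illustrative assumptions, and the exact wording of plan descriptions varies between SQLite versions, so the sketch inspects them rather than relying on a fixed string.

```python
import sqlite3


def plan_for(conn, sql, params=()):
    """Return the plan descriptions SQLite reports for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The last column of each row holds the human-readable description.
    return [row[-1] for row in rows]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, kind TEXT)")

query = "SELECT * FROM events WHERE user_id = ?"

# Without an index, the planner has to scan the table.
plan_before = plan_for(conn, query, (7,))

conn.execute("CREATE INDEX idx_events_user ON events(user_id)")

# With an index on user_id, the planner can search the index instead.
plan_after = plan_for(conn, query, (7,))
```

The logical query is identical in both cases; only the plan chosen by the database changes once the index exists.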

Database Architecture Basics

Database architecture defines how storage, processing, and control components are organized within a data system. It separates responsibilities across specialized layers to maintain reliability, scalability, and operational stability.

- Storage engines manage how data is physically stored, organized, and persisted. They control file structures, memory management, indexing mechanisms, and data layout strategies.

- Processing engines handle query execution, computation, and data transformation. They manage how operations are performed on stored data.

- Execution layers coordinate query planning, scheduling, and resource allocation.

- Control layers manage transactions, concurrency, access control, and system coordination.

This layered architecture enables databases to scale, support parallel workloads, and operate as shared infrastructure components across multiple applications and services.

Storage and Processing Models

Database systems use different models to manage how data is stored and processed. These models influence performance characteristics, access patterns, and system scalability.

Row-based storage organizes data by records, storing complete rows together. This model supports transactional workloads that require frequent inserts, updates, and point lookups.

Column-based storage organizes data by attributes, storing values from the same column together. This model supports analytical workloads that read a few attributes across many records, because only the referenced columns need to be scanned.
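The difference between the two layouts can be sketched with plain Python data structures. This is a conceptual illustration only; real storage engines use pages, compression, and encodings that are not modeled here, and the sample records are invented.

```python
records = [
    (1, "EU", 10.0),
    (2, "US", 20.0),
    (3, "EU", 5.0),
]

# Row layout: all values of one record sit together.
row_store = list(records)

# Column layout: all values of one attribute sit together.
column_store = {
    "id": [r[0] for r in records],
    "region": [r[1] for r in records],
    "amount": [r[2] for r in records],
}


def point_lookup(rows, record_id):
    """Fetch one complete record, the typical transactional access."""
    for row in rows:
        if row[0] == record_id:
            return row
    return None


def total_amount(columns):
    """Aggregate one attribute, the typical analytical access."""
    # Only the "amount" column is touched; "id" and "region" are never read.
    return sum(columns["amount"])


one_record = point_lookup(row_store, 2)
amount_sum = total_amount(column_store)
```

The row layout returns a whole record in one step, while the column layout computes the aggregate without reading unrelated attributes.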

In-memory processing keeps active data in memory to reduce access latency and improve execution speed.

Disk-based processing relies on persistent storage for long-term data retention and durability.

Caching models reduce access latency by storing frequently used data in faster storage layers. These models balance performance, cost, and persistence across storage hierarchies.
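A caching layer can be approximated in a few lines with `functools.lru_cache` from the Python standard library. The `read_from_disk` function below is a stand-in for a slow storage access and is purely hypothetical; the counter only exists to show how often the slower layer is reached.

```python
from functools import lru_cache

backing_store_reads = 0  # counts how often the slow layer is hit


def read_from_disk(key):
    """Stand-in for a slow read from persistent storage."""
    global backing_store_reads
    backing_store_reads += 1
    return f"value-for-{key}"


@lru_cache(maxsize=128)  # keep up to 128 recently used entries in memory
def cached_read(key):
    return read_from_disk(key)


first = cached_read("a")   # miss: goes to the backing store
second = cached_read("a")  # hit: served from the in-memory cache
cache_stats = cached_read.cache_info()
```

The `maxsize` bound reflects the trade-off described above: a larger cache avoids more slow reads but consumes more of the faster, costlier storage layer.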

Scalability and Concurrency

Scalability and concurrency define how database systems handle growth and parallel usage. These capabilities determine whether a system can support increasing data volumes, user activity, and workload complexity.

Horizontal scaling distributes data and processing across multiple nodes.

Vertical scaling increases capacity within a single system through additional resources.

Parallel processing executes operations simultaneously to improve throughput and reduce latency.

Multi-user concurrency allows multiple users and applications to access and modify data at the same time. Database systems coordinate concurrent operations to prevent conflicts, preserve consistency, and maintain stable performance under parallel workloads.
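One common way to coordinate concurrent writers is optimistic concurrency control, sketched below with `sqlite3`. This technique is chosen here only as an example and is one of several that database systems and applications use; the `items` table, its `version` column, and the helper names are assumptions. Two writers read the same row, and the second write is rejected because the row changed in the meantime.

```python
import sqlite3


def read_item(conn, item_id):
    """Return (stock, version) for one item."""
    return conn.execute(
        "SELECT stock, version FROM items WHERE id = ?", (item_id,)
    ).fetchone()


def update_if_unchanged(conn, item_id, new_stock, expected_version):
    """Write only if nobody else changed the row since it was read."""
    with conn:
        cur = conn.execute(
            "UPDATE items SET stock = ?, version = version + 1 "
            "WHERE id = ? AND version = ?",
            (new_stock, item_id, expected_version),
        )
    # rowcount is 0 when the version no longer matches.
    return cur.rowcount == 1


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, stock INTEGER, version INTEGER)")
conn.execute("INSERT INTO items VALUES (1, 10, 0)")
conn.commit()

# Two writers read the same state before either one writes.
stock_a, version_a = read_item(conn, 1)
stock_b, version_b = read_item(conn, 1)

first_ok = update_if_unchanged(conn, 1, stock_a - 1, version_a)
second_ok = update_if_unchanged(conn, 1, stock_b - 2, version_b)  # stale version
final_state = read_item(conn, 1)
```

The second writer would normally re-read the row and retry, which prevents its stale value from silently overwriting the first update.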

Performance evaluation in such environments requires careful methodology, because concurrency and scale can distort measured results. These challenges, along with practical approaches to testing, are covered in work on reproducible database benchmarking.

Distributed Databases

Distributed databases store and process data across multiple physical or logical systems. This architecture improves availability, resilience, and scalability.

Replication creates multiple copies of data to improve reliability and fault tolerance.

Partitioning divides data into separate segments to distribute storage and processing.

Sharding assigns partitions to different nodes to scale horizontally across systems.

Consistency models define how data synchronization is managed across distributed components.

Fault tolerance mechanisms ensure continued operation during system failures and infrastructure disruptions.
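Partitioning, sharding, and replication can be sketched together in a few lines of Python. The example assumes simple hash-based placement, which is only one of several possible schemes; the node names, key format, and replication factor are hypothetical.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Map a key to a partition number using a stable hash."""
    # hashlib gives the same result across processes, unlike built-in hash().
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def replicas_for(key: str, nodes: list, replication_factor: int = 2) -> list:
    """Pick the node that owns the key plus the following nodes as replicas."""
    start = shard_for(key, len(nodes))
    return [nodes[(start + i) % len(nodes)] for i in range(replication_factor)]


nodes = ["node-a", "node-b", "node-c"]
owners = replicas_for("user:42", nodes)
```

Because each key is stored on more than one node, a single node failure leaves at least one copy reachable. Note that modulo-based placement moves many keys when the node count changes, which is why production systems often prefer other schemes such as consistent hashing.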

Transactional vs Analytical Systems

Database systems are designed for different workload types.

Transactional systems (OLTP) handle high-frequency, low-latency operations such as inserts, updates, and point queries. They prioritize consistency, reliability, and rapid response times.

Analytical systems (OLAP) process large data volumes for reporting, aggregation, and analysis. They prioritize throughput, parallel processing, and efficient data scanning.

These system types differ in architecture, storage models, and execution strategies, reflecting their distinct operational requirements.
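The two workload types can be contrasted with a pair of queries against the same data. The sketch below uses `sqlite3` and an invented `sales` table; it shows the shape of each query only and says nothing about how dedicated transactional or analytical engines execute them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [(1, "EU", 10.0), (2, "US", 20.0), (3, "EU", 5.0)],
)

# Transactional style: fetch one record by its key.
single_sale = conn.execute(
    "SELECT region, amount FROM sales WHERE sale_id = ?", (2,)
).fetchone()

# Analytical style: scan many records and aggregate them.
totals_by_region = conn.execute(
    "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
).fetchall()
```

The point query touches a single row, while the aggregate reads every row but only two of its columns, which is the access pattern that analytical systems are built around.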

Modern Data Platforms

Modern data platforms integrate databases into broader data ecosystems. They combine storage systems, processing engines, analytics layers, and integration services into unified architectures.

Cloud-native databases operate on distributed infrastructure and elastic resource models.

Data platforms coordinate data ingestion, storage, processing, and access across systems.

Analytics engines optimize large-scale data processing for analytical workloads.

These platforms form the foundation of modern data architectures, supporting scalable, distributed, and multi-system data environments.

Summary

Database systems provide the structural foundation for modern digital infrastructure. They define how data is stored, organized, accessed, and processed across applications, platforms, and services.

The core concepts of databases, including structure, storage models, processing layers, transactions, and architecture, form a consistent framework that applies across different technologies and system designs. From basic database structures to distributed platforms and analytical systems, these principles remain central to how data systems operate.

FAQs

The four commonly recognized database types are:

Relational databases – store data in structured tables with defined relationships.

NoSQL databases – store semi-structured or unstructured data using non-tabular models (e.g., key-value, document, graph).

Hierarchical databases – organize data in tree-like parent–child structures.

Network databases – organize data using interconnected records with multiple relationships.

The five main components of a database system are:

Data – the stored information.

Schema – the structural definition of the data.

DBMS – the software that manages storage, access, and control.

Query language – the interface for accessing and modifying data (e.g., SQL).

Users and applications – systems and users that interact with the database.

The standard phases of database design are:

Requirements analysis

Conceptual design

Logical design

Schema design

Physical design

Implementation

Testing and validation

Core SQL database concepts include:

Tables and schemas

Rows and columns

Primary and foreign keys

Relationships

Indexes

Transactions

Constraints

Queries

Views

Stored procedures