Streaming Analytics Made Simple with the Exasol Kafka Connector

In today’s data-driven world, businesses are no longer satisfied with insights that arrive hours, or even minutes, too late. Real-time data streams from applications, IoT devices, and event-driven systems demand analytics platforms that can keep up. This is where the Exasol Kafka Connector comes in, bridging the gap between high-throughput streaming data and ultra-fast analytics.

Why Kafka + Exasol?

Apache Kafka has become the de facto standard for event streaming. It reliably handles massive volumes of data, decouples producers from consumers, and enables real-time architectures. Exasol is a high-performance database built for blazing-fast analytics with minimal operational overhead.

Together, Kafka and Exasol allow organizations to:

- Ingest streaming data continuously into an analytics-ready database.

- Run complex analytical queries on fresh data with sub-second response times.

- Simplify data architecture by reducing intermediate storage and batch jobs.

The Exasol Kafka Connector makes this integration seamless.

What Is the Exasol Kafka Connector?

The Exasol Kafka Connector is a Kafka Connect–compatible Exasol extension that enables reliable, scalable, and near–real-time ingestion of Kafka topics directly into Exasol. It is designed for production-grade workloads and is deployed as a connector extension using BucketFS.

Key features:

- Kafka Connect compatible – integrates cleanly with Kafka Connect–based architectures

- High-throughput ingestion – leverages Exasol’s parallel loading capabilities

- Multiple data formats – supports JSON, Avro, and String record formats out of the box

- Operational flexibility – works with on-premises deployments and Exasol SaaS

- Fault-tolerant ingestion – relies on Kafka offset management for reliable delivery
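
To make the role of offsets concrete, here is a minimal Python sketch of offset-based resumption. It is a simulation under stated assumptions, not the connector's implementation: the topic is an in-memory dictionary of partitions, the staging table is a plain list, and `last_offsets` and `import_batch` are hypothetical helpers. The idea it illustrates is that if every stored row carries its Kafka partition and offset, a restarted import can continue after the highest offset already stored, so records are not loaded twice.

```python
import json

# Hypothetical in-memory stand-in for a Kafka topic: partition id -> ordered records.
topic = {
    0: ['{"user": "a", "amount": 10}', '{"user": "b", "amount": 25}'],
    1: ['{"user": "c", "amount": 7}'],
}

# Hypothetical staging table: rows of (json_doc, kafka_partition, kafka_offset).
staging = []


def last_offsets(rows):
    """Return the highest stored offset per partition."""
    offsets = {}
    for _doc, partition, offset in rows:
        offsets[partition] = max(offset, offsets.get(partition, -1))
    return offsets


def import_batch(topic, rows):
    """Append every record whose offset lies beyond what is already stored."""
    seen = last_offsets(rows)
    added = 0
    for partition, records in topic.items():
        start = seen.get(partition, -1) + 1
        for offset in range(start, len(records)):
            json.loads(records[offset])  # fail early on malformed JSON
            rows.append((records[offset], partition, offset))
            added += 1
    return added


first = import_batch(topic, staging)   # loads all three records
second = import_batch(topic, staging)  # nothing new, so nothing is loaded
topic[1].append('{"user": "d", "amount": 3}')
third = import_batch(topic, staging)   # loads only the newly published record
```

Because the resume point is derived from the stored rows themselves, running the import again after a failure is safe in this sketch: it simply picks up where the last successful load ended.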

How It Works

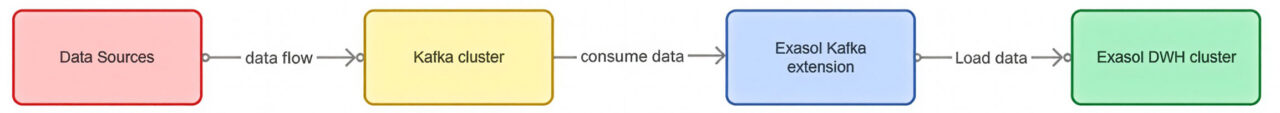

At a high level, the connector continuously reads records from Kafka topics and imports them into the Exasol cluster using the Kafka Connector Extension.

- Kafka producers publish events (commonly JSON or Avro) to Kafka topics.

- Kafka Connect orchestrates connector tasks and manages scaling and offsets.

- The Exasol Kafka Connector Extension runs inside Exasol and reads from Kafka, loading data via high-performance parallel imports.

- Incoming data is often stored first in raw or staging tables (for example as JSON documents), enabling schema flexibility.

- Downstream transformations are performed inside Exasol using SQL or tools like dbt, following an ELT pattern.

This approach enables near–real-time analytics while keeping ingestion pipelines simple and resilient.
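
The staging-then-transform pattern described above can be sketched in a few lines. In practice this step would be a SQL or dbt model running inside Exasol; Python is used here only to make the logic runnable. The event fields (`order_id`, `amount`, `ts`, `coupon`) and the `to_typed_row` helper are assumptions made for illustration.

```python
import json
from datetime import datetime
from decimal import Decimal

# Hypothetical raw staging rows: each entry is one JSON document as ingested.
raw_events = [
    '{"order_id": 1, "amount": "19.90", "ts": "2024-05-01T10:00:00"}',
    '{"order_id": 2, "amount": "5.00", "ts": "2024-05-01T10:00:05", "coupon": "SPRING"}',
    '{"order_id": 3, "ts": "2024-05-01T10:00:09"}',
]


def to_typed_row(doc):
    """Turn one raw JSON document into a typed row; absent fields become None."""
    event = json.loads(doc)
    amount = event.get("amount")
    return {
        "order_id": int(event["order_id"]),
        "amount": Decimal(amount) if amount is not None else None,
        "ts": datetime.fromisoformat(event["ts"]),
        "coupon": event.get("coupon"),
    }


# The "T" in ELT: derive an analytics-ready model from the raw documents.
orders = [to_typed_row(doc) for doc in raw_events]
revenue = sum(row["amount"] for row in orders if row["amount"] is not None)
```

Keeping the raw documents untouched means a new field such as `coupon` can appear in the stream without breaking ingestion; only the transformation needs to learn about it.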

Common Use Cases

The Exasol Kafka Connector unlocks a wide range of real-time and near–real-time analytics scenarios:

Real-Time Business Dashboards

By ingesting events such as user interactions, transactions, or application logs in near real time, business teams gain up-to-the-minute visibility into operations. This enables faster decision-making, quicker reaction to changing customer behavior, and more confident executive reporting based on current – not stale – data.

Fraud Detection and Risk Analysis

Streaming transaction data directly into Exasol allows organizations to identify risks earlier, reducing financial losses and reputational damage. Analysts can combine live data with historical context to spot anomalies faster, shorten investigation cycles, and act before issues escalate.

Operational Monitoring

Centralizing system and application events in Exasol provides a single source of truth for operational intelligence. Teams can proactively detect performance bottlenecks, reduce downtime, and improve service reliability – directly impacting customer satisfaction and operational costs.

Benefits for Data Teams

With the Exasol Kafka Connector, data engineers and analysts gain:

- Reduced complexity – fewer custom ingestion pipelines to build and maintain

- Faster time to insight – analytics on fresh data, not yesterday’s snapshots

- Operational confidence – built-in fault tolerance and offset management

- Future-proof architecture – supports modern, event-driven data platforms

Getting Started

Getting started with the Exasol Kafka Connector typically involves the following steps:

- Prepare Kafka

  - Run a Kafka cluster (often via Docker Compose for demos, or a managed Kafka service in production).

- Deploy the Connector Extension

  - For Docker-based Exasol: upload the connector JAR to BucketFS.

  - For Exasol SaaS: upload the connector JAR via the SaaS UI.

- Configure the Connector

  - Define Kafka bootstrap servers, topics, record formats (JSON, Avro, String), and target tables.

- Create Target Tables

  - Many teams ingest data into raw tables first to allow for evolving schemas.

- Transform and Analyze

  - Use SQL, dbt, or orchestration tools like Apache Airflow to transform streaming data into analytics-ready models.
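
Before wiring the configuration step into a deployment, it can help to sanity-check the settings it lists. The following is a minimal sketch, assuming a hypothetical `ConnectorSettings` container; the field names are illustrative and are not the connector's actual parameter names, which are defined in its documentation.

```python
from dataclasses import dataclass

SUPPORTED_FORMATS = {"json", "avro", "string"}  # the formats named in this article


@dataclass(frozen=True)
class ConnectorSettings:
    """Hypothetical container for the settings listed in the steps above."""

    bootstrap_servers: str  # comma-separated host:port pairs
    topic: str
    record_format: str
    target_table: str  # expected as SCHEMA.TABLE

    def problems(self):
        """Return a list of human-readable problems; empty means none were found."""
        found = []
        servers = [s.strip() for s in self.bootstrap_servers.split(",") if s.strip()]
        if not servers:
            found.append("no bootstrap servers given")
        for server in servers:
            host, _, port = server.rpartition(":")
            if not host or not port.isdigit():
                found.append(f"bootstrap server {server!r} is not host:port")
        if not self.topic.strip():
            found.append("topic name is empty")
        if self.record_format.lower() not in SUPPORTED_FORMATS:
            found.append(f"unsupported record format {self.record_format!r}")
        if self.target_table.count(".") != 1:
            found.append(f"target table {self.target_table!r} is not SCHEMA.TABLE")
        return found


good = ConnectorSettings("kafka-1:9092,kafka-2:9092", "orders", "JSON", "RAW.ORDERS")
bad = ConnectorSettings("kafka-1", "orders", "xml", "ORDERS")

good_problems = good.problems()  # no issues expected
bad_problems = bad.problems()    # missing port, unknown format, unqualified table
```

A check like this catches typos before the first import runs, which is usually cheaper than debugging an ingestion job that silently reads nothing.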

Once configured, data flows continuously from Kafka into Exasol and is immediately available for analytical queries.

Detailed documentation and examples are available in the Exasol Kafka Connector documentation.

Conclusion

Real-time data deserves real-time analytics. The Exasol Kafka Connector enables organizations to combine the power of Apache Kafka’s event streaming with Exasol’s high-performance analytics—without complexity.

Whether you are building real-time dashboards, detecting fraud, or analyzing streaming IoT data, the Exasol Kafka Connector helps you turn events into insights, faster.

Ready to stream data into Exasol? Explore the Exasol Kafka Connector and start analyzing your data as it happens.