Announcing Exasol Integration with MLflow: Streamlining AI & ML Workflows

We’re excited to announce a new Exasol + MLflow integration prototype that brings together Exasol’s high-performance analytics engine with MLflow’s open-source MLOps framework. This integration makes it easier for data science teams to manage, deploy, and monitor models directly within their Exasol-powered workflows.

What is Exasol?

Exasol is the world’s fastest analytics engine, purpose-built for performance, scalability, and concurrency. It enables teams to run advanced analytics, in-database machine learning (via UDFs), and AI-driven workloads with unmatched speed.

What is MLflow?

MLflow is the leading open-source platform for the machine learning lifecycle. It includes:

- Experiment Tracking – logging parameters, metrics, and results from training runs.

- Model Registry – versioning, organizing, and deploying models.

- Artifact Repository – storing model files, dependencies, and environment definitions.

- Inference Server – serving models for predictions.

By integrating MLflow with Exasol, we bridge the gap between data engineering and model operations.

Why This Integration Matters

AI workflows often stall when teams move from experimentation to production: data sits in one system, while models live in another. Exasol + MLflow addresses this by pairing MLflow’s model management with inference that is invoked directly from the database layer.

Key benefits include:

- Direct Model Access from SQL – Exasol UDFs can gather model properties and call models registered in MLflow, so predictions run directly where your data lives.

- Support for Large Models – Successfully tested with LLMs of up to 4 GB, including dynamic model loading from Hugging Face or OpenAI.

- Flexible Deployment – The MLflow server can run anywhere, but colocating an instance with each Exasol node gives the lowest latency and lets inference run in parallel across nodes.

- Custom Authentication – Token-based authentication ensures secure, granular access to models.

- Comprehensive Metadata – Model inputs, outputs, dependencies, and artifacts are captured by MLflow. This metadata unlocks drift detection and retraining triggers.
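
To make "secure, granular access" more concrete, here is a minimal sketch of how a server could scope tokens to individual model tags. The token table, the tag names, and the `is_authorized` helper are hypothetical illustrations, not the prototype's actual authentication code; in practice tokens would come from a secret store rather than source code.

```python
import hashlib
import hmac


def _digest(token: str) -> str:
    """Hash a token so the table never holds raw secrets."""
    return hashlib.sha256(token.encode("utf-8")).hexdigest()


# Hypothetical token table: token digests mapped to the model tags each may call.
TOKEN_SCOPES = {
    _digest("analytics-team-token"): {"churn-model", "sentiment-llm"},
    _digest("finance-team-token"): {"fraud-model"},
}


def is_authorized(authorization_header: str, model_tag: str) -> bool:
    """Return True if the Bearer token in the header may call model_tag."""
    scheme, _, token = authorization_header.partition(" ")
    if scheme != "Bearer" or not token:
        return False
    presented = _digest(token)
    for known, tags in TOKEN_SCOPES.items():
        # Constant-time comparison avoids leaking matches through timing.
        if hmac.compare_digest(presented, known):
            return model_tag in tags
    return False
```

Scoping by tag means one leaked token exposes only the models it was issued for; issuance and revocation are left out of this sketch.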

How It Works

Open API Interface

The integration is API-driven. Its FastAPI server automatically generates an OpenAPI description and a lightweight web GUI for its endpoints, so they are self-documenting and easy to test.
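
By default, FastAPI publishes the machine-readable schema at `/openapi.json` and the interactive Swagger UI at `/docs`. Because the schema is plain JSON, a script can discover the available endpoints without reading any source code. The sketch below assumes the prototype keeps FastAPI's default schema path; the helper names are illustrative.

```python
import json
import urllib.request

# Keys of an OpenAPI path item that describe operations (others, such as
# "parameters" or "summary", are path-level metadata).
HTTP_METHODS = {"get", "post", "put", "patch", "delete", "head", "options", "trace"}


def list_operations(spec: dict) -> list[tuple[str, str, str]]:
    """Return sorted (METHOD, path, summary) tuples for an OpenAPI spec."""
    ops = []
    for path, item in spec.get("paths", {}).items():
        for method, op in item.items():
            if method in HTTP_METHODS:
                ops.append((method.upper(), path, op.get("summary", "")))
    return sorted(ops)


def fetch_spec(base_url: str, timeout: float = 10.0) -> dict:
    """Download the schema FastAPI serves at /openapi.json by default."""
    with urllib.request.urlopen(f"{base_url}/openapi.json", timeout=timeout) as resp:
        return json.load(resp)
```

For example, `list_operations(fetch_spec("http://localhost:5000"))` would list every route of an instance at that address; the host and port here are assumptions, so substitute the values from your deployment.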

Model Loading and Inference

- Models can be preloaded or dynamically added without restarting MLflow.

- Each model is identified by a tag in the configuration file.

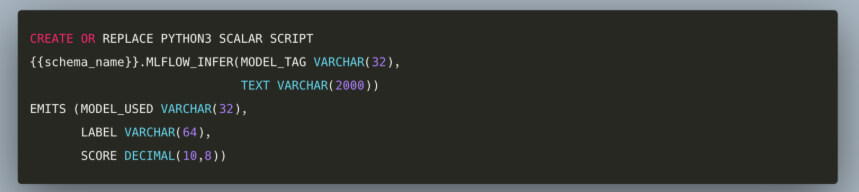

- UDFs in Exasol use an AI client to call MLflow’s inference endpoints, passing data frames directly.
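
The call path from a UDF can be sketched in Python, the language of Exasol's Python UDFs. This is an illustration under stated assumptions, not the prototype's client: the base URL, the `/models/<tag>/invocations` route, the `churn-model` tag, the `customer_id` and feature column names, and the token are all hypothetical. The request body uses MLflow's `dataframe_split` input format (column names plus row values), and the response is read from the `predictions` key returned by MLflow 2.x scoring servers.

```python
import json
import urllib.request

# Hypothetical: the MLflow instance colocated on the same Exasol node.
BASE_URL = "http://localhost:5000"


def build_payload(columns, rows):
    """Encode rows in MLflow's 'dataframe_split' input format."""
    return {"dataframe_split": {"columns": list(columns),
                                "data": [list(row) for row in rows]}}


def build_request(tag, columns, rows, token=None, base_url=BASE_URL):
    """Build a POST request for the model registered under `tag`."""
    # Hypothetical route layout; the prototype's real endpoints may differ.
    url = f"{base_url}/models/{tag}/invocations"
    headers = {"Content-Type": "application/json"}
    if token:
        headers["Authorization"] = f"Bearer {token}"
    body = json.dumps(build_payload(columns, rows)).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def predict(tag, columns, rows, token=None, timeout=60):
    """Send one batch to the inference server and return its predictions."""
    req = build_request(tag, columns, rows, token)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())["predictions"]


# Hypothetical feature columns of the input table.
INPUT_COLUMNS = ["tenure_months", "monthly_spend"]


def run(ctx):
    """Body of a SET UDF: ctx starts on the first row; next() returns False at the end."""
    ids, rows = [], []
    while True:
        ids.append(ctx.customer_id)
        rows.append([getattr(ctx, col) for col in INPUT_COLUMNS])
        if not ctx.next():
            break
    # Send the whole group as one batch, then emit one prediction per input row.
    for customer_id, pred in zip(ids, predict("churn-model", INPUT_COLUMNS, rows)):
        ctx.emit(customer_id, pred)
```

In Exasol, `run` would sit inside a `CREATE OR REPLACE PYTHON3 SET SCRIPT ... EMITS (...)` statement. A pandas-based variant could read the batch with `ctx.get_dataframe()` instead of iterating row by row.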

Hosting Architecture

- One MLflow instance per node is the simplest configuration, ensuring local execution and parallelism.

- Servers share the same configuration file, so models load consistently across nodes.

- Load balancing across multiple instances allows for scalable deployments.
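
Load balancing can live in a reverse proxy or in the client, and the text above does not say which approach the prototype uses. As a hypothetical client-side illustration, the sketch below rotates requests round-robin across instances and skips any instance that fails with a network error. The class name and URLs are made up for the example.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


class RoundRobinBalancer:
    """Spread requests over several MLflow instances, skipping unreachable ones."""

    def __init__(self, urls: Sequence[str]):
        if not urls:
            raise ValueError("at least one instance URL is required")
        self.urls = list(urls)
        self._next = 0  # index of the instance that receives the next request

    def order(self) -> list[str]:
        """Return all instances, starting one position later on every call."""
        n = len(self.urls)
        start = self._next
        self._next = (start + 1) % n
        return [self.urls[(start + i) % n] for i in range(n)]

    def call(self, send: Callable[[str], T]) -> T:
        """Invoke send(url) on the first instance that answers without a network error."""
        last_error = None
        for url in self.order():
            try:
                return send(url)
            except OSError as exc:  # URLError, timeouts, and refused connections are OSErrors
                last_error = exc
        raise RuntimeError("no MLflow instance reachable") from last_error
```

The rotation counter is not thread-safe; guard it with a lock if one balancer is shared across threads.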

Resource Considerations

Since Exasol and MLflow share machine resources, memory allocation is crucial:

- Command-line parameters allow limiting memory and parallel requests per MLflow instance.

- For production deployments, Exasol recommends reserving separate, explicit portions of RAM for the database and for MLflow to prevent crashes when loading large models.

- GPU support is possible when running Python-based models.
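
A back-of-the-envelope check helps when splitting RAM between the database and MLflow. The sketch below rests on a hypothetical simplification: every parallel worker holds its own copy of each loaded model plus a fixed per-worker overhead. Real memory use depends on the model flavor and serving setup, so measure before relying on such numbers. All names and figures are illustrative.

```python
from dataclasses import dataclass


@dataclass
class NodeBudget:
    total_ram_gb: float   # physical RAM on the Exasol node
    os_reserve_gb: float  # headroom kept for the OS and other processes
    mlflow_share: float   # fraction of the remainder reserved for MLflow (0..1)

    def mlflow_gb(self) -> float:
        return (self.total_ram_gb - self.os_reserve_gb) * self.mlflow_share

    def database_gb(self) -> float:
        return (self.total_ram_gb - self.os_reserve_gb) * (1 - self.mlflow_share)


def mlflow_required_gb(model_sizes_gb, workers, overhead_per_worker_gb=1.0):
    """Estimated footprint, assuming each worker loads its own copy of every model."""
    return workers * (sum(model_sizes_gb) + overhead_per_worker_gb)


def max_workers(budget: NodeBudget, model_sizes_gb, overhead_per_worker_gb=1.0) -> int:
    """Largest worker count whose estimated footprint fits MLflow's RAM share."""
    per_worker = sum(model_sizes_gb) + overhead_per_worker_gb
    return int(budget.mlflow_gb() // per_worker)
```

For example, a 128 GB node with 8 GB kept for the OS and 25% of the remainder reserved for MLflow leaves 30 GB for MLflow and 90 GB for the database. With one 4 GB LLM and 1 GB of overhead per worker, `max_workers` allows 6 parallel workers. That figure would then feed whatever parallelism limit the MLflow instance's command-line parameters expose.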

Why It Matters for AI Teams

This integration gives technical teams a direct path from data to production inference:

- Reduced friction – no need to move models and data between disconnected systems.

- Scalable experimentation – MLflow’s model registry and artifact store work seamlessly with Exasol’s UDF execution.

- Better governance – token-based auth and model metadata provide security and reproducibility.

- Faster iteration – dynamically load new models, test in-database, and scale across nodes.

Together, Exasol and MLflow help teams operationalize AI faster, with less overhead, and with the confidence that their data and models are working in sync.

As a result, data scientists and analysts can easily work with production AI & ML models within their SQL workflows, and your organization can ensure that these models are managed, governed, and fresh.

What’s Next

This prototype is available under Exasol Labs, meaning it’s open for exploration but not yet fully productized. This is your opportunity to try the prototype and tell us which future enhancements would benefit your organization most, such as:

- Adding MLflow’s tracking server to capture experiment logs and usage statistics.

- Aligning with MLflow’s token-based authentication standard.

- Publishing deployment resource guides to support production-grade workloads.

Closing Thoughts

Exasol has always been about speed and performance at scale. By integrating with MLflow, we’re extending that vision into the heart of the AI lifecycle—where models meet data.

Whether you’re running classical ML models or testing the latest large language models, this integration ensures that your data workflows and your model workflows finally work as one.

👉 Keep an eye on our Exasol Labs GitHub for documentation and deployment examples.