Modern Data Warehouse: Definition, Architecture and Best Practices

When monthly reporting grinds to a halt or query wait times stretch into hours, it’s not the analytics team that’s the problem – it’s the data infrastructure. A modern data warehouse eliminates those bottlenecks, scaling on demand, adapting to new data sources, and delivering insights without sacrificing governance or cost control.

The strongest designs connect directly to both cloud and hybrid environments, handle real-time analytics alongside machine learning, and keep performance steady even as workloads grow. The result: faster answers, the flexibility to pivot, and a platform that can evolve with your business instead of holding it back.

This guide explains what makes a data warehouse “modern,” outlines its key characteristics, provides a high-level look at its architecture, and shares best practices for building a data warehouse that’s future-proof.

Try a Modern Data Warehouse for Free

Run Exasol locally and test real workloads at full speed.

What Is a Modern Data Warehouse?

A modern data warehouse is a centralized platform for storing, processing, and analyzing structured and semi-structured data at scale. It pulls data from SaaS apps, transactional systems, and streaming feeds, delivers results in near real time, and relies on well-designed data warehouse models to balance flexibility, performance, and schema clarity.

While many enterprise data warehouses now include modern capabilities, the term “modern” typically refers to designs that emphasize flexibility, integration, and support for advanced analytics.

They can run in the cloud, on-premises, or in hybrid environments, making it easier for organizations to match their technology to business, compliance, and budget requirements.

Modern data warehouses typically offer:

- Support for multiple data formats – including structured, semi-structured (JSON, Avro, Parquet), and sometimes unstructured data.

- Integration with modern data pipelines – enabling both batch and streaming ingestion.

- Built-in governance and security features – including role-based access control, encryption, and auditing. These are among the main advantages of a data warehouse in regulated and high-stakes environments.

These capabilities allow teams to handle high-volume workloads, support advanced analytics and machine learning, and respond quickly to changing business needs.

A modern data warehouse isn’t just a faster database — it’s an adaptable ecosystem designed to handle diverse data types, massive scale, and evolving business demands without costly redesigns.

Florian Wenzel, VP of Product

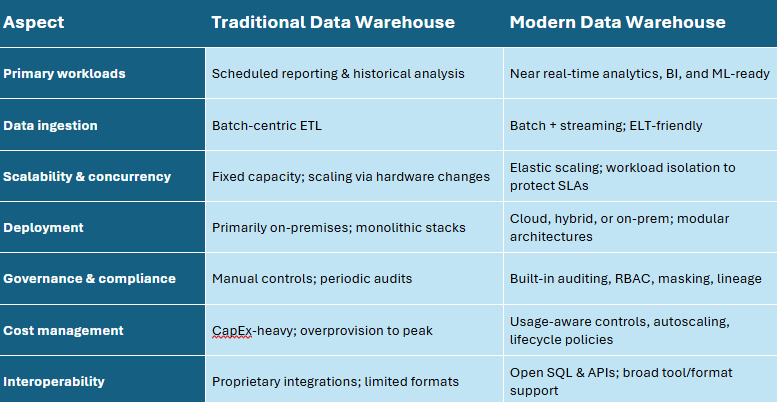

Key Characteristics of a Modern Data Warehouse

No single technology defines a modern data warehouse; it’s a combination of data warehouse concepts, design principles, and capabilities that make it flexible, scalable, and ready for today’s analytics demands.

Support for ELT, Streaming, and Batch Processing

Handles ingestion through batch loads, near real-time streaming, or ELT pipelines — so analytics stay current regardless of how or when the data arrives.
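To make the difference between batch and near real-time ingestion concrete, here is a minimal Python sketch. It assumes an in-memory SQLite database as a stand-in for the warehouse and a hypothetical `events` table; the same loader handles a one-off batch load and a stream consumed in small micro-batches, which is one common way near real-time ingestion is implemented.

```python
import sqlite3
from itertools import islice
from typing import Iterable, Iterator

# Stand-in for the warehouse: an in-memory SQLite database (assumption for illustration).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, source TEXT, amount REAL)")


def load_rows(rows: Iterable[tuple]) -> int:
    """Insert one batch of (id, source, amount) rows in a single transaction."""
    batch = list(rows)
    with conn:  # commits on success, rolls back if any insert fails
        conn.executemany("INSERT INTO events VALUES (?, ?, ?)", batch)
    return len(batch)


def micro_batches(stream: Iterator, size: int) -> Iterator[list]:
    """Group a (potentially unbounded) stream into batches of at most `size` rows."""
    while batch := list(islice(stream, size)):
        yield batch


# Batch: a nightly export, represented here as a list of rows.
load_rows([(1, "erp", 120.0), (2, "erp", 75.5)])

# Near real-time: events arrive one at a time and are loaded every 2 rows.
event_stream = iter([(3, "web", 9.99), (4, "web", 19.99), (5, "app", 4.5)])
for batch in micro_batches(event_stream, size=2):
    load_rows(batch)

count, total = conn.execute("SELECT COUNT(*), SUM(amount) FROM events").fetchone()
print(count, round(total, 2))  # 5 229.98
```

Smaller micro-batches lower latency at the cost of more transactions; the batch size is the main tuning knob in this pattern.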

Scalability and Concurrency

Elastic scaling ensures the platform can handle increasing volumes of data and higher numbers of concurrent users without slowing query response time.

Built-In Governance and Security

Modern platforms include role-based access control (RBAC), encryption at rest and in transit, and detailed audit logs to meet compliance requirements. These controls are often implemented through identity and access management (IAM) systems, key management services, and security information and event management (SIEM) tools.
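At its core, RBAC maps users to roles and roles to privileges, and denies anything not explicitly granted. The sketch below illustrates that logic in plain Python, assuming hypothetical role names, objects, and a simple action/object privilege model; real warehouses express the same idea with SQL `GRANT` statements and their own privilege systems.

```python
from dataclasses import dataclass, field

# Hypothetical grants: role -> set of (action, object) pairs it may perform.
ROLE_PRIVILEGES: dict[str, set[tuple[str, str]]] = {
    "analyst": {("SELECT", "sales"), ("SELECT", "customers_masked")},
    "data_engineer": {("SELECT", "sales"), ("INSERT", "sales"), ("SELECT", "customers")},
}


@dataclass
class User:
    name: str
    roles: set[str] = field(default_factory=set)


# Every access decision is recorded, which is what an audit log would retain.
audit_log: list[tuple[str, str, str, bool]] = []


def is_allowed(user: User, action: str, obj: str) -> bool:
    """Grant access if any of the user's roles holds the privilege (deny by default)."""
    allowed = any((action, obj) in ROLE_PRIVILEGES.get(role, set()) for role in user.roles)
    audit_log.append((user.name, action, obj, allowed))
    return allowed


alice = User("alice", {"analyst"})
print(is_allowed(alice, "SELECT", "sales"))      # True
print(is_allowed(alice, "SELECT", "customers"))  # False: only the masked view is granted
```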

Openness and Interoperability

Works with standard SQL, APIs, and multiple data formats to integrate cleanly with BI, analytics, and data science tools.

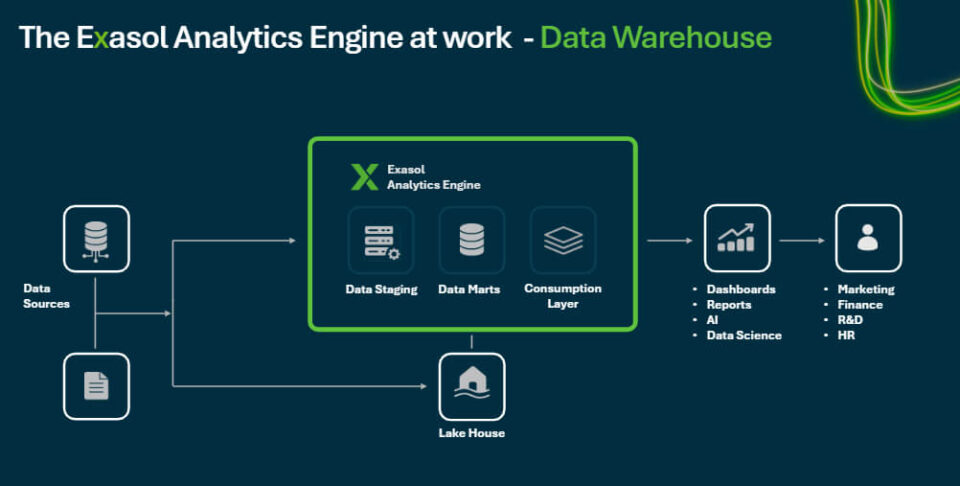

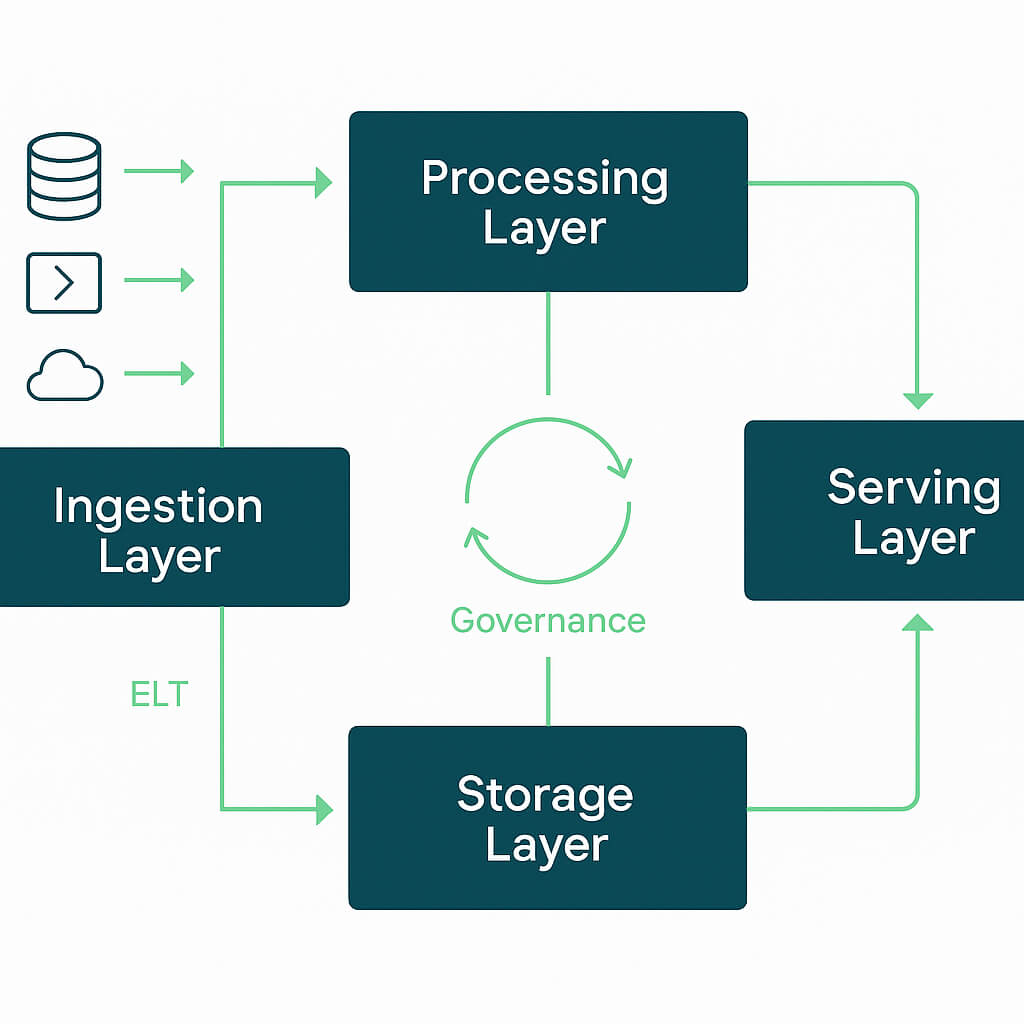

Architecture at a Glance

Modern data warehouse architecture uses a modular, flexible, and resilient design that adapts as analytics needs change. At a high level, it brings together ingestion, processing, storage, and serving layers in a way that supports both batch and real-time workloads.

Core Components

- Ingestion Layer – Collects data from transactional systems, SaaS platforms, APIs, and streaming sources, often using data integration platforms, ETL/ELT tools, and event streaming services.

- Processing Layer – Handles transformation, cleaning, and enrichment, frequently supported by data transformation frameworks, workflow orchestration tools, and SQL-based processing engines.

- Storage Layer – Stores structured and semi-structured data in relational databases, columnar storage systems, and cloud object storage, optimized for analytics workloads.

- Serving Layer – Powers BI dashboards, reports, and machine learning applications.
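The hand-offs between the four layers can be illustrated end to end in a few lines. This is a toy sketch, assuming SQLite as the storage layer and JSON records from a hypothetical order feed; in production each function would typically be a separate tool or service.

```python
import json
import sqlite3


def ingest() -> list[str]:
    """Ingestion layer: collect raw records (hard-coded here; normally an API or stream)."""
    return [
        '{"order_id": 1, "country": "de", "amount": "19.90"}',
        '{"order_id": 2, "country": "AT", "amount": "5.10"}',
        '{"order_id": 3, "country": "de", "amount": null}',
    ]


def process(raw: list[str]) -> list[tuple[int, str, float]]:
    """Processing layer: parse, drop incomplete records, normalize types and codes."""
    rows = []
    for line in raw:
        rec = json.loads(line)
        if rec["amount"] is None:
            continue  # cleaning: skip records missing a required field
        rows.append((rec["order_id"], rec["country"].upper(), float(rec["amount"])))
    return rows


def store(conn: sqlite3.Connection, rows: list[tuple[int, str, float]]) -> None:
    """Storage layer: persist cleaned rows in an analytics table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, country TEXT, amount REAL)"
    )
    with conn:
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)


def serve(conn: sqlite3.Connection) -> list[tuple[str, float]]:
    """Serving layer: the kind of aggregate a BI dashboard would request."""
    return conn.execute(
        "SELECT country, ROUND(SUM(amount), 2) FROM orders GROUP BY country ORDER BY country"
    ).fetchall()


conn = sqlite3.connect(":memory:")
store(conn, process(ingest()))
print(serve(conn))  # [('AT', 5.1), ('DE', 19.9)]
```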

Deployment Models

Modern data warehouses can be deployed in:

- Cloud environments – Fully managed, elastic resources.

- Hybrid setups – Combining cloud scalability with on-premises control.

- On-premises – For organizations with strict compliance or data residency needs.

The National Institute of Standards and Technology (NIST) Big Data Interoperability Framework defines vendor-neutral terminology and reference architecture components that can help guide these deployment choices.

For a deeper breakdown of each layer, patterns like multi-cluster MPP, and example designs, see our complete guide to data warehouse architecture.

Migration Blueprint

Migrating to a modern data warehouse isn’t just a technical upgrade—it’s a strategic project that impacts processes, teams, and budgets.

1. Assess Your Current Environment

- Inventory all data sources, pipelines, and analytics workloads.

- Identify technical debt, performance bottlenecks, and compliance constraints.

- Define business priorities, such as real-time reporting, AI readiness, or cost control.

2. Design for Future Needs

- Select a deployment model (cloud, hybrid, on-premises) that aligns with compliance and scalability goals. Many organizations also need to preserve existing legacy investments or require capabilities we cover in our article on SQL data warehouses.

- Build with a modular architecture to support new tools and integration methods.

- Integrate governance, security, and cost optimization from the outset.

Strong governance frameworks help mitigate risks from both external and internal threats. The European Union Agency for Cybersecurity (ENISA) Big Data Threat Landscape identifies emerging risks in analytics environments and provides actionable best practices for large-scale data systems.

3. Pilot Before Full Rollout

- Start with a high-impact use case to validate the architecture and workflows.

- Test ingestion, transformation, and serving layers under realistic workloads.

- Collect feedback from stakeholders early to refine requirements.

4. Scale and Optimize

- Expand workloads in phases while maintaining data quality and meeting performance benchmarks.

- Track resource utilization and fine-tune configurations to control costs.

- Document processes for ongoing maintenance, monitoring, and improvement.

5. Common Pitfalls to Avoid

- Migrating everything at once without prioritization.

- Underestimating the time for data quality and transformation work.

- Leaving security, compliance, and cost governance until late in the process.

Governance, Compliance & Data Sovereignty

Strong governance ensures a data warehouse meets regulatory standards, controls access, and protects sensitive information. Governance and compliance aren’t optional add-ons; they need to be designed into the architecture from the start.

Governance by Design

- Role-Based Access Control (RBAC) – Restrict data access based on roles and responsibilities.

- Data Lineage & Auditing – Track how data moves, transforms, and is used throughout the warehouse.

- Metadata Management – Maintain clear definitions, classifications, and usage guidelines.
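Data lineage is, at its core, a directed graph of datasets and the transformations that connect them. A minimal sketch, assuming hypothetical dataset names; dedicated metadata catalogs capture the same edges automatically from pipeline runs, but the query "where did this table come from?" works the same way.

```python
from collections import defaultdict

# Hypothetical lineage edges: downstream dataset -> upstream datasets it is built from.
upstream: dict[str, set[str]] = defaultdict(set)


def record_transformation(output: str, inputs: list[str]) -> None:
    """Register that `output` was derived from `inputs` (e.g. by an ELT job)."""
    upstream[output].update(inputs)


def trace_sources(dataset: str) -> set[str]:
    """Return every upstream dataset that `dataset` ultimately depends on."""
    seen: set[str] = set()
    stack = [dataset]
    while stack:
        # .get() avoids creating empty entries in the defaultdict while traversing.
        for parent in upstream.get(stack.pop(), ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


record_transformation("staging.orders", ["raw.crm_orders", "raw.web_orders"])
record_transformation("mart.revenue_by_region", ["staging.orders", "ref.regions"])
print(sorted(trace_sources("mart.revenue_by_region")))
# ['raw.crm_orders', 'raw.web_orders', 'ref.regions', 'staging.orders']
```

The same graph, walked in the opposite direction, supports impact analysis: finding every report affected when a source table changes.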

Aligning with ISO/IEC 27001, the international standard for information security management systems, helps protect the confidentiality, integrity, and availability of data.

Compliance with Industry Regulations

Whether it’s GDPR, HIPAA, or CCPA, compliance measures need to be embedded directly into workflows:

- Automated masking of personally identifiable information (PII).

- Encryption of data at rest and in transit.

- Built-in audit logs to demonstrate adherence during reviews.
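Automated PII masking is often a function applied before data reaches non-privileged users. The sketch below shows two common variants for an email column, assuming a hypothetical key and row layout: partial masking for display, and a keyed hash (HMAC) that keeps values joinable. Note that hashing is pseudonymization, not anonymization; GDPR still treats pseudonymized data as personal data.

```python
import hashlib
import hmac
import re

# Keep the first character of the local part and the full domain.
EMAIL_RE = re.compile(r"^([^@])[^@]*(@.+)$")


def mask_email(email: str) -> str:
    """Partial masking for display, e.g. in BI tools used by non-privileged roles."""
    m = EMAIL_RE.match(email)
    return f"{m.group(1)}***{m.group(2)}" if m else "***"


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed hash (HMAC-SHA256): the same input always yields the same token,
    so masked rows can still be joined and counted without exposing the value."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


# Hypothetical key; in practice it would come from a key management service.
KEY = b"example-key-do-not-use"

row = {"customer_id": 42, "email": "jane.doe@example.com", "country": "DE"}
masked_row = {
    **row,
    "email": mask_email(row["email"]),
    "email_token": pseudonymize(row["email"], KEY),
}
print(masked_row["email"])  # j***@example.com
```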

Data Sovereignty Considerations (2025+)

For organizations operating in multiple regions, data residency laws can affect where and how data is stored:

- Choose deployment regions that match local legal requirements.

- Use hybrid or multi-cloud architectures to segment sensitive datasets.

- Monitor regulatory changes to avoid non-compliance in the future.
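One way to enforce residency rules is to route each dataset to a storage region based on its classification and origin before it is written. A minimal sketch, assuming hypothetical region names and a simplified policy table; the actual rules come from legal review, and the table is what needs updating as regulations change.

```python
# Hypothetical policy: (classification, origin country) -> regions where the data may be stored.
RESIDENCY_POLICY: dict[tuple[str, str], list[str]] = {
    ("personal", "DE"): ["eu-central"],
    ("personal", "FR"): ["eu-central", "eu-west"],
    ("personal", "US"): ["us-east"],
}
# Hypothetical region list for data that carries no residency restriction.
UNRESTRICTED_REGIONS = ["eu-central", "eu-west", "us-east"]


def allowed_regions(classification: str, origin: str) -> list[str]:
    if classification != "personal":
        return UNRESTRICTED_REGIONS
    try:
        return RESIDENCY_POLICY[(classification, origin)]
    except KeyError:
        # Fail closed: personal data from an origin without a rule is not stored anywhere.
        raise ValueError(f"no residency rule for personal data from {origin}") from None


def choose_region(classification: str, origin: str, preferred: str) -> str:
    """Use the preferred region when policy allows it, otherwise the first allowed one."""
    regions = allowed_regions(classification, origin)
    return preferred if preferred in regions else regions[0]


print(choose_region("personal", "DE", preferred="us-east"))   # eu-central
print(choose_region("aggregate", "DE", preferred="us-east"))  # us-east
```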

By integrating governance, data sovereignty, and compliance measures into the core design, you reduce legal risk, maintain operational continuity, and keep analytics pipelines uninterrupted across regions.

Cost & Performance Optimization

Without active cost and performance management, a modern data warehouse can quickly consume unnecessary resources and overshoot budgets.

Workload Isolation & Autoscaling

- Run analytical and operational workloads on separate compute clusters to prevent performance contention.

- Use autoscaling to handle spikes in demand without keeping excess capacity running.
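Autoscaling policies generally compare a load signal against thresholds and add or remove capacity within fixed bounds. A minimal sketch of such a rule, assuming queued queries per cluster as the signal and hypothetical threshold values; managed platforms implement this internally with their own metrics.

```python
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    min_clusters: int = 1
    max_clusters: int = 4
    scale_out_queue: int = 10  # queued queries per cluster that trigger scale-out
    scale_in_queue: int = 2    # below this, one cluster can be released


def desired_clusters(current: int, queued_queries: int, policy: ScalingPolicy) -> int:
    """Return the cluster count for the next interval, moving one step at a time.

    Assumes `current` is at least policy.min_clusters (and therefore >= 1).
    """
    per_cluster = queued_queries / current
    if per_cluster > policy.scale_out_queue:
        target = current + 1
    elif per_cluster < policy.scale_in_queue:
        target = current - 1
    else:
        target = current
    # Clamp to the configured bounds so costs stay predictable.
    return max(policy.min_clusters, min(policy.max_clusters, target))


policy = ScalingPolicy()
print(desired_clusters(current=2, queued_queries=30, policy=policy))  # 3 (spike)
print(desired_clusters(current=3, queued_queries=3, policy=policy))   # 2 (quiet)
print(desired_clusters(current=4, queued_queries=80, policy=policy))  # 4 (capped)
```

Scaling one step per interval and capping at `max_clusters` trades a little responsiveness for protection against runaway spend.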

Monitoring & Alerting

- Set thresholds for CPU, memory, and query response times.

- Use automated alerts to detect unusual usage patterns before they impact budgets.
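A simple way to avoid noisy alerts is to fire only when a threshold is breached for several consecutive checks. A minimal sketch, assuming hypothetical metric names and limits:

```python
from collections import defaultdict

# Hypothetical limits per metric.
THRESHOLDS = {"cpu_pct": 85.0, "memory_pct": 90.0, "p95_query_seconds": 30.0}
CONSECUTIVE_BREACHES = 3  # require a sustained breach before alerting

breach_counts: dict[str, int] = defaultdict(int)


def check(sample: dict[str, float]) -> list[str]:
    """Update breach counters from one metrics sample and return metrics to alert on."""
    alerts = []
    for metric, limit in THRESHOLDS.items():
        if sample.get(metric, 0.0) > limit:
            breach_counts[metric] += 1
            if breach_counts[metric] == CONSECUTIVE_BREACHES:
                alerts.append(metric)  # fire once when the streak reaches the limit
        else:
            breach_counts[metric] = 0  # streak broken, reset
    return alerts


samples = [
    {"cpu_pct": 92, "memory_pct": 60, "p95_query_seconds": 12},
    {"cpu_pct": 95, "memory_pct": 61, "p95_query_seconds": 45},
    {"cpu_pct": 97, "memory_pct": 63, "p95_query_seconds": 8},
]
for s in samples:
    fired = check(s)
    if fired:
        print("ALERT:", fired)  # ALERT: ['cpu_pct'] after the third sample
```

The single slow-query spike in the second sample does not trigger an alert, while the sustained CPU pressure does.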

Query Optimization

- Leverage indexing, clustering, and materialized views to reduce compute requirements.

- Review slow-running queries regularly to identify tuning opportunities.
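When reviewing a slow query, comparing its execution plan before and after a change shows whether the tuning actually helped. This sketch uses SQLite's `EXPLAIN QUERY PLAN` as a stand-in with a hypothetical `sales` table; plan syntax differs between databases, and columnar MPP engines may rely on techniques other than secondary indexes. The summary table at the end illustrates the idea behind a materialized view: precompute an aggregate once so dashboards do not rescan raw rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales (region, amount) VALUES (?, ?)",
    [("EU" if i % 2 else "US", float(i)) for i in range(1000)],
)

QUERY = "SELECT SUM(amount) FROM sales WHERE region = ?"


def plan(sql: str) -> str:
    """Return SQLite's plan description (the last column of each plan row)."""
    return " | ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, ("EU",)))


before = plan(QUERY)  # typically reports a full table SCAN
conn.execute("CREATE INDEX idx_sales_region ON sales (region)")
after = plan(QUERY)   # typically reports SEARCH ... USING INDEX idx_sales_region
print(before)
print(after)

# Materialized-view-style summary: precompute once, refresh on a schedule.
conn.execute(
    "CREATE TABLE sales_by_region AS "
    "SELECT region, SUM(amount) AS total FROM sales GROUP BY region"
)
print(conn.execute("SELECT * FROM sales_by_region ORDER BY region").fetchall())
```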

With continuous monitoring and optimization, you maintain predictable costs and high performance, even as query volumes and workloads scale.

Exasol puts its performance claims to the test every night—running over 100,000 performance checks across 239 different workloads, with nearly 57,000 server hours committed to ensure consistent speed and scalability. Learn more in our blog post, Exasol performance.

Integration with Data Lakes & Lakehouses

Modern data warehouses often work alongside other data storage and processing technologies. A well-integrated architecture ensures the right workloads run on the right platform.

Data Lakes

- Ideal for storing raw, unstructured, or semi-structured data at scale.

- Complement the data warehouse by acting as a landing zone before transformation and modeling.

For a full overview, check our article Data Lake vs Data Warehouse.

Lakehouses

- Combine features of data lakes and data warehouses in a single platform.

- Useful for analytics that require both structured querying and flexible data science exploration.

Integration Considerations

- Plan clear data flows between platforms to avoid duplication.

- Use metadata management to track datasets across systems and maintain lineage.

- Monitor query performance when federating across platforms.

Supporting AI & Machine Learning Workloads

Modern analytics increasingly rely on machine learning and AI, requiring data warehouses to integrate seamlessly with machine learning frameworks, data science notebooks, and model deployment platforms.

If your data warehouse isn’t AI-ready today, you’re already behind. AI isn’t an add-on anymore — it’s a core capability, and the architecture has to support experimentation and deployment from day one.

Madeleine Corneli, Senior Product Manager AI/ML, Exasol

In-Database AI/ML Execution

Use your warehouse as a runtime environment for training and executing ML models via in-memory UDFs or SQL-native extensions.
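The principle behind in-database execution is that a scoring function is registered with the database and called from SQL, so rows never leave the engine. A minimal sketch using Python's built-in `sqlite3` module and `Connection.create_function` as a stand-in; Exasol's UDF framework uses its own script syntax, and the logistic-regression weights and table below are hypothetical.

```python
import math
import sqlite3

# Hypothetical pre-trained logistic-regression weights for churn scoring.
WEIGHTS = {"intercept": -3.0, "support_tickets": 0.8, "months_active": -0.05}


def churn_score(support_tickets: int, months_active: int) -> float:
    """Score one row; the SQL engine calls this for every row the query processes."""
    z = (WEIGHTS["intercept"]
         + WEIGHTS["support_tickets"] * support_tickets
         + WEIGHTS["months_active"] * months_active)
    return 1.0 / (1.0 + math.exp(-z))


conn = sqlite3.connect(":memory:")
conn.create_function("churn_score", 2, churn_score)  # register as a SQL function
conn.execute("CREATE TABLE customers (id INTEGER, support_tickets INTEGER, months_active INTEGER)")
conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", [(1, 0, 36), (2, 6, 3), (3, 2, 12)])

# Scoring happens inside the query, next to the data.
for row in conn.execute(
    "SELECT id, ROUND(churn_score(support_tickets, months_active), 3) AS risk "
    "FROM customers ORDER BY risk DESC"
):
    print(row)
```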

AI Toolchain Integration

Seamlessly connect with tools like Exasol’s AI Lab, UDF framework, Transformers Extension, and generative AI workflows.

Key Benefits

Running AI/ML workloads inside the warehouse:

- Reduces latency by keeping compute next to data

- Simplifies deployment by eliminating external infrastructure

- Accelerates AI-driven analytics and predictive insights

Modern Data Warehousing in Practice

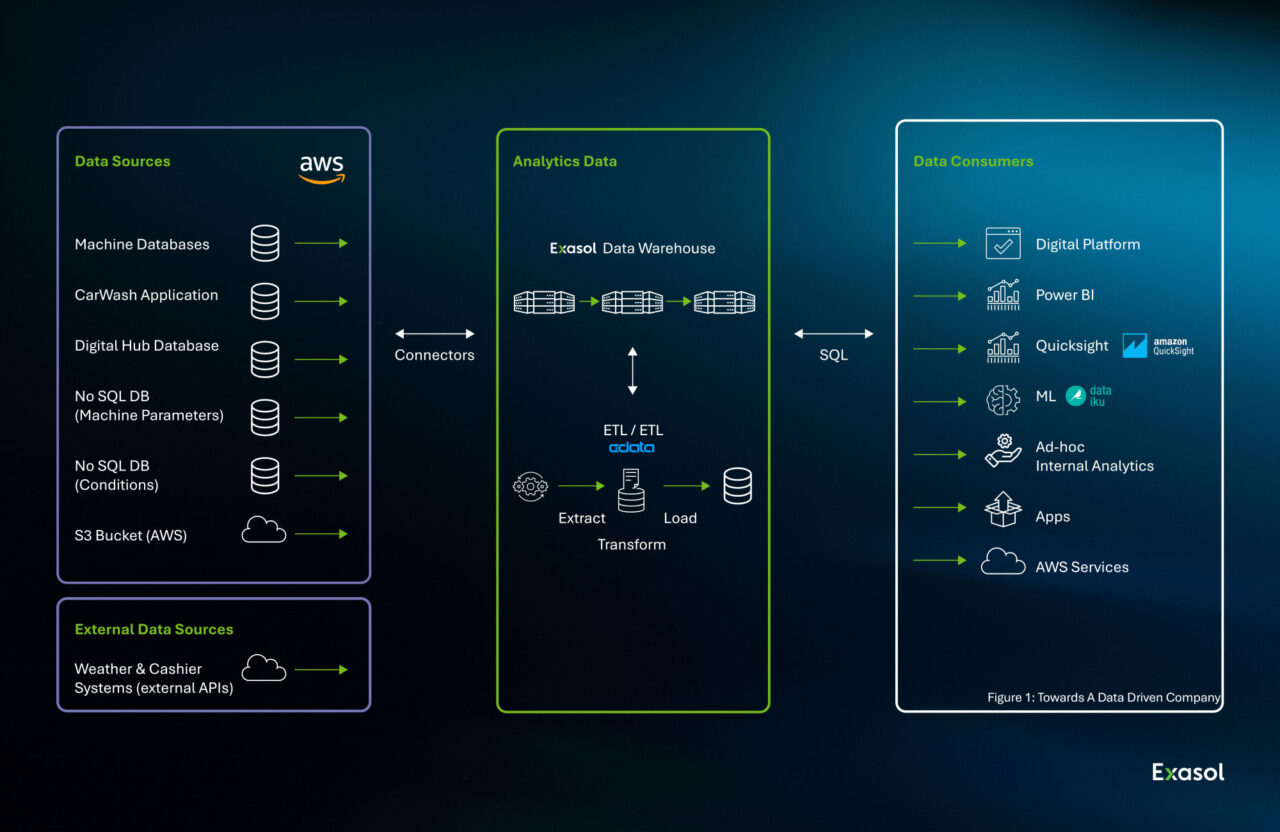

These examples show how different industries apply modern data warehouse principles to meet unique compliance, performance, and analytics demands.

Global Enterprise with Real-Time Agility

Cineplexx modernized its data infrastructure to achieve near real-time operational visibility across multiple countries. The new platform enables data-driven decision-making and personalized customer experiences, all while managing complex, multi-market operations.

Predictive Maintenance in Industrial Settings

WashTec leverages Exasol alongside machine learning tools to minimize equipment downtime, optimize resource allocation, and enhance customer satisfaction. Real-time analytics power predictive maintenance, ensuring higher operational efficiency and service quality.

Regulated Healthcare at Scale

Piedmont Healthcare replaced its legacy analytics infrastructure with a modern data warehouse that delivers faster insights across multiple hospitals. The new system supports high-performance dashboards, meets stringent compliance requirements, and lays the foundation for AI-driven innovation.

Wrapping up

A modern data warehouse that combines flexible architecture, embedded governance, and tuned performance doesn’t just support analytics; it enables real-time, compliant, and scalable decision-making across the organization.

Frequently Asked Questions

The modern data warehouse model combines high-performance querying, flexible deployment options, and support for multiple data formats, enabling real-time analytics.

The three main types of data warehouses are:

1. Enterprise Data Warehouse (EDW) – Centralized, organization-wide repository.

2. Operational Data Store (ODS) – Focused on near real-time operational reporting.

3. Data Mart – Subject-specific subset of data for specific teams or functions.

Key trends in modern data warehousing include lakehouse integration, real-time analytics, AI/ML-powered query optimization, and multi-cloud or hybrid deployments to meet data sovereignty requirements.

The main function of a data warehouse is to store, organize, and process data so it’s ready for analytics, reporting, and machine learning. It serves as a single source of truth for structured and semi-structured data.

To build a modern data warehouse, start with a clear understanding of your data sources, governance requirements, and performance goals. Choose an architecture that supports elastic scaling, cost management, and security from the outset.