Cloud computing is everywhere. You read about it in the news, your colleagues are constantly talking about it, and it could get worse: customers and colleagues might ask you for advice.

They might even look to you for the information they need to make a decision. Even worse, you might have to prepare a formal proposal for your company’s cloud strategy that is both structured and comprehensible.

Is the cloud the wrong choice for your organization, or is it in fact an option you can leverage to gain a competitive advantage while streamlining your enterprise architecture? Our blog mini-series addresses these key questions and provides general facts and information to help you justify your position.

Yes, you heard me right: Moving to the cloud can sometimes be the wrong decision. There are still good reasons to stick with the server rack in your basement.

Within the four articles of this blog series, I will cover the following topics:

Part 1

1. Maximum performance

2. Consistent performance

Part 2

3. Availability

4. Configuration & deployment agility

Part 3

5. Upfront investment (CapEx)

6. Contractual flexibility

7. Internal know-how/resources/staff

8. Cost of continuous usage vs. cost of spot usage

Part 4

9. Enterprise cloud strategy & hybrid approaches

10. Summary

As cloud vs. on-premise isn’t a black-and-white decision, I will discuss these topics by looking at the following cloud options in more detail and comparing each of them to on-premise:

- Unmanaged Fully Virtualized Cloud

Although cloud providers like AWS and MS Azure offer some managed services (e.g. Database as a Service), it’s quite common to just “move” your applications onto a virtualized server in the cloud. Here, you typically have to manage these servers yourself. When we refer to this category, we therefore focus only on the Infrastructure as a Service (IaaS) facet of the offerings.

- Unmanaged Bare Metal Cloud

If you don’t want to share hardware with other customers, a bare metal cloud such as BigStep, IBM SoftLayer or Rackspace Bare Metal might be a viable alternative. As the name implies, a bare metal cloud runs your software exclusively on a single physical machine, without significant virtualization infrastructure in between.

- Managed Bare Metal Cloud / Hosting

If you don’t want to manage and operate your applications and infrastructure components within your company, a managed offering may be the right approach. One example is EXACloud, Exasol’s premium hosting service, which runs and operates high-performance in-memory database systems on bare metal, supported by Exasol operations experts.

So let’s start to dig deeper:

Maximum performance

There are many applications where maximum performance is not crucial, e.g. web servers, a CRM system or an intranet information management solution. In such cases, you don’t have to worry about the performance overhead that comes with virtualized solutions. The performance you get is “sufficient”, and throughput can simply be increased by adding more servers.

But there are important use cases where performance rules.

Suppose you need KPIs on the previous day’s operations by 6:00 am in order to adjust your production, and these KPIs have to be derived from hundreds of terabytes of data. Then the number-crunching jobs have to finish between midnight and 6:00 am. Otherwise, production and supplier management can’t be adjusted in time, and your company risks losing money.
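To get a feel for what such a batch window demands, here is a back-of-the-envelope sketch. The 100 TB figure is an assumption chosen for illustration (the scenario only says “hundreds of terabytes”), and the calculation assumes each byte is read exactly once, so real KPI jobs that scan data repeatedly would need more.

```python
# Back-of-the-envelope sketch: sustained throughput needed to scan a data set
# once within a fixed batch window. The 100 TB volume is an illustrative
# assumption; decimal units are used (1 TB = 1e12 bytes, 1 GB = 1e9 bytes).

def required_throughput_gb_per_s(data_tb: float, window_hours: float) -> float:
    """Return the throughput in GB/s needed to read `data_tb` terabytes
    exactly once within `window_hours` hours."""
    data_bytes = data_tb * 1e12
    window_seconds = window_hours * 3600
    return data_bytes / window_seconds / 1e9


# Nightly window from midnight to 6:00 am, i.e. 6 hours.
rate = required_throughput_gb_per_s(data_tb=100, window_hours=6)
print(f"Required sustained throughput: {rate:.2f} GB/s")
```

Under these assumptions the cluster must sustain roughly 4.6 GB/s for the entire six hours, so any overhead that lowers the achievable throughput pushes the finish time directly past the 6:00 am deadline.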

Or, even more obviously: If your system has to select and display the right ad within 100 ms while a visitor’s page is loading, the ad space on the page will remain blank if the computation takes 110 ms.
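A hard deadline like this can be sketched with Python’s `asyncio.wait_for`: if the work doesn’t finish within the budget, its result is simply discarded. `select_ad` and its simulated compute time are hypothetical stand-ins, not a real ad-serving API, and the 100 ms budget is taken from the example above.

```python
import asyncio
from typing import Optional

DEADLINE_S = 0.100  # 100 ms budget while the page is loading


async def select_ad(compute_time_s: float) -> str:
    """Hypothetical ad selection that takes `compute_time_s` seconds."""
    await asyncio.sleep(compute_time_s)  # stands in for the real computation
    return "ad-123"


async def serve_ad(compute_time_s: float) -> Optional[str]:
    """Return the selected ad, or None if the deadline is missed."""
    try:
        return await asyncio.wait_for(select_ad(compute_time_s), timeout=DEADLINE_S)
    except asyncio.TimeoutError:
        return None  # too late: the ad space on the page stays blank


print(asyncio.run(serve_ad(0.050)))  # well within the 100 ms budget
print(asyncio.run(serve_ad(0.110)))  # misses the budget by 10 ms
```

The point of the sketch is that there is no partial credit: a result that arrives 10 ms late is worth exactly as much as no result at all.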

Maximum performance is crucial here.

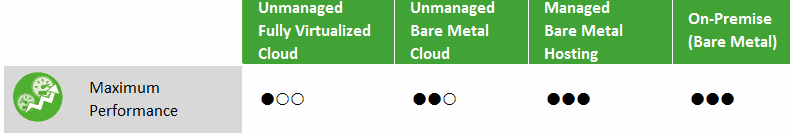

In general, virtualized cloud offerings can’t deliver maximum performance because of inherent virtualization overhead and shared resources. As the examples above show, maximum performance is crucial if you are dealing with time-constrained, business-critical computations or analyses.

The inherent technical overhead and complexity of virtualization negatively affect the following areas:

- network performance

- compute instance performance

- shared storage performance

Maximum performance summary

Managed hosting and on-premise deliver the best performance, as these options typically use optimized hardware without virtualization.

Unmanaged Bare Metal Cloud offerings are typically slightly slower, as their general-purpose hardware and infrastructure are not optimized for application-specific scenarios.

Consistent performance

There is a second, perhaps even more important performance-related dimension: Consistent performance.

This aspect is often referred to as the noisy neighbor effect: the concern that other customers you share resources with might degrade the performance of your application, in particular during your “neighbors’” peak usage periods.

This concern is not new at all. It has been a factor ever since shared resources (network, SAN, etc.) became part of IT infrastructure. Of course, these issues are more significant given the multi-tenant nature of cloud computing: everything is shared, and you don’t even know your “noisy” neighbors or their usage patterns.

But again, the impact depends on the use case.

Assume you have 100 web servers in the cloud, and just one of them currently needs about 260 ms to deliver a web page instead of the 200 ms required by the remaining 99 servers. In this case, 1% of the users might wait 60 ms longer than all other users. Effectively, you only lose performance for the one user group served by this one web server; averaged over all servers, the response time increases by just 0.3%. Obviously, that’s completely negligible.
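The 0.3% figure follows from averaging: each request is handled by a single server, so one slow server only shifts the mean slightly. This sketch assumes the load balancer spreads requests evenly across all 100 servers, which the scenario implies but doesn’t state.

```python
# Sketch: one slow server in a farm of independent web servers.
# Assumes requests are spread evenly across all servers, so the average
# user-visible response time is the mean over the servers.

def mean_response_time_ms(times_ms: list[float]) -> float:
    """Average response time across servers (even load assumed)."""
    return sum(times_ms) / len(times_ms)


baseline = [200.0] * 100           # all 100 servers at 200 ms
degraded = [200.0] * 99 + [260.0]  # one server slowed to 260 ms

increase = mean_response_time_ms(degraded) / mean_response_time_ms(baseline) - 1
print(f"Average response time increase: {increase:.1%}")
```

The mean rises from 200 ms to 200.6 ms: a 30% slowdown on 1% of the servers translates into a 0.3% increase overall.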

In the context of massively parallel processing (MPP) data management and computing solutions, such as MPP databases, this might be a totally different story.

In general, the response time of an MPP data management system that distributes data across all nodes depends on the slowest node in the cluster (that is, the node that returns the last result fragment).

In shared environments, performance fluctuations affecting single nodes are not uncommon due to peak loads and temporary bottlenecks. As a consequence, the more nodes an MPP system has, the more it may suffer from such fluctuations in shared environments.
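Why growing node counts make things worse can be sketched with a simple probability model. Assume, purely for illustration, that each node independently hits a slow period during a given query with probability `p`. Then the chance that at least one of `n` nodes is slow, and therefore slows down the entire query, is `1 - (1 - p) ** n`. Both the independence assumption and the value `p = 0.01` are hypothetical.

```python
# Sketch: probability that at least one of n nodes is slowed down, assuming
# each node is independently affected with probability p (illustrative model).

def prob_any_node_slow(p: float, n: int) -> float:
    """Chance that at least one of n independent nodes is slow."""
    return 1 - (1 - p) ** n


for n in (1, 10, 100, 1000):
    print(f"{n:>5} nodes: {prob_any_node_slow(0.01, n):.1%}")
```

Under this model, a 1% per-node risk becomes roughly a 63% chance that a 100-node query is held up by at least one slow node.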

Let’s assume your MPP database runs on 100 virtualized nodes and one single node needs 13 instead of 10 hours to complete its part of the KPI computation that is needed to steer your production process. As the final result is not available before the slowest node finishes its part, you will get your result after 13 instead of 10 hours.

This might be too late to adjust your production process. Furthermore, you end up with a response time 30% worse than the one you paid for: you pay for a system that delivers results in 10 hours, but it took 13 hours to return them. Remember that in the similar web server example (one web server needed 30% more time to respond than the remaining 99), overall performance dropped by only 0.3%.
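The difference from the web server case comes down to max versus mean: an MPP query can’t complete before its last result fragment arrives, so its response time is the maximum over all node times. This sketch uses the node times from the example above and, as a simplifying assumption, ignores the time needed to merge the fragments.

```python
# Sketch: an MPP query's response time is set by its slowest node.
# Node times follow the example in the text (100 nodes, 10 h vs. 13 h);
# the time to merge result fragments is ignored for simplicity.

def mpp_response_time_hours(node_times_hours: list[float]) -> float:
    """The query completes only when the slowest node completes."""
    return max(node_times_hours)


expected = mpp_response_time_hours([10.0] * 100)          # all nodes healthy
observed = mpp_response_time_hours([10.0] * 99 + [13.0])  # one slow node

print(f"Response time: {observed:.0f} h instead of {expected:.0f} h "
      f"(+{observed / expected - 1:.0%})")
```

The same single slow node that cost the web farm 0.3% here sets the response time for the entire cluster.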

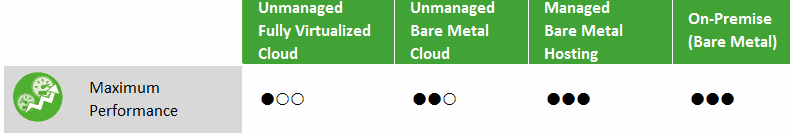

In non-virtualized, dedicated environments, such performance fluctuations affecting single nodes, caused by other tenants, do not occur. In these environments, nodes are equally fast, and the overall system provides predictable response times.

Consistent performance summary

The unmanaged bare metal cloud gets a slightly worse grade than on-premise and hosting because, although the computations are carried out on “bare metal”, it still relies on a heavily shared network and quite often on shared storage as well.