Extensibility as a Critical Metric for Database Performance

When people evaluate database performance, they most likely refer to the query performance—the faster, the better. This is an important metric. The less time an end user has to wait for the result of his query, the higher the acceptance level and satisfaction. However, this criterion is often the only one, resulting in complicated implementations and high operational efforts.

Many of Exasol’s unique attributes contribute directly to superior query performance and lower operation costs, including MPP architecture, in-memory processing, and automatic index management. But this says nothing about the ability of a database to adapt to the customer’s need for additional functionality, which does not come out of the box.

In other words, can the database grow with my future requirements, and if so, how is this achieved? The key to extensibility in Exasol is the so-called User Defined Function (UDF) or a database script. While this is not unique to a particular database vendor, implementing User Defined Functions differs widely. All the different kinds of implementations come with advantages but also with disadvantages.

The Easy Way with Exasol

At Exasol, we have natively integrated the Lua (https://www.lua.org) script language into the database, which speeds up transformation tasks. Additionally, Lua allows to code user-defined functions, which are extremely fast but, unfortunately, at the expense of functionality. While many tasks can be implemented with Lua, for tasks beyond its capabilities, Exasol offers a much more flexible script language environment – more on that later.

Scripting Environments/Languages

Some database vendors allow users to code user-defined functions in a scripting language, such as Python, using the runtime environment of the underlying database node. Here, you can add packages and libraries to the runtime environment, allowing you complete flexibility in coding specific user-defined functions.

One can think that he is fine now and all problems are solved. But what could happen if you install many additional packages in your runtime environment because you have many user-defined functions, or the few packages you install have specific requirements that enforce the installation of additional packages? You might guess it already: you run into problems with version dependencies and, therefore, with incompatible package versions or packages that harm the functionality of other packages.

In other words, the more base packages you install, the higher the likelihood of a hefty nightmare. You can fight this possible nightmare with virtual environments in the runtime environments. Still, you need to be able to activate or deactivate the virtual environments from the database if a database is supporting this. Supporting more than one language environment makes it even more complex. Luckily, Exasol has anticipated this level of complexity and provides an approach to support this degree of customization.

Scripting Environments the Smart Way

The ultimate solution is a containerized approach, where you can deploy multiple language containers, each for specific tasks or customized functionality. Due to possibly competing base packages and versions, you install only the required packages without compromises.

Enabling a language environment is as easy as executing an „ALTER SESSION“ or „ALTER SYSTEM“ SQL command. The containers are now on standby but do not consume any resources. When calling a user-defined function or database script, the container is activated, the function is executed, and the container will be deactivated again, freeing up the resources. This is precisely the approach we follow at Exasol. And because of our MPP architecture, those user-defined functions can be executed in parallel efficiently. The language containers will be distributed to the individual nodes automatically. As an extra benefit, the runtime environments do not interfere with the runtime environments of the underlying database nodes or host OS. This adds a layer of security in case of a faulty runtime environment in the container or on the host. By standard, Exasol currently supports three languages for containerized runtime environments: Python, Java, and R.

Let me show you how to create a user-defined function in Python that fulfills your needs for a specific use case.

In our example, we want to call an externally hosted Large Language Model (LLM) and interact with data from the database. The external LLM is hosted on the LM-Studio (https://lmstudio.ai) application, which provides server functionality and can be contacted over the OpenAI API. The default script language container from Exasol does not contain the OpenAI package for Python. We need to create a new container which we can use. We have two options for creating a customized script language container. The first option is to download the base environment, customize, export, and upload the new container using the command line interface. The second option, which I am using in this example, is the usage of the Exasol AI-Lab.

It comes for free, and its primary use is to support data scientists with AI or ML development tasks and models, finally being deployed on the database itself. I am using the AI-Lab inside a Docker environment, ensuring the Docker socket from the host operating system is mounted into the Docker AI-Lab container.

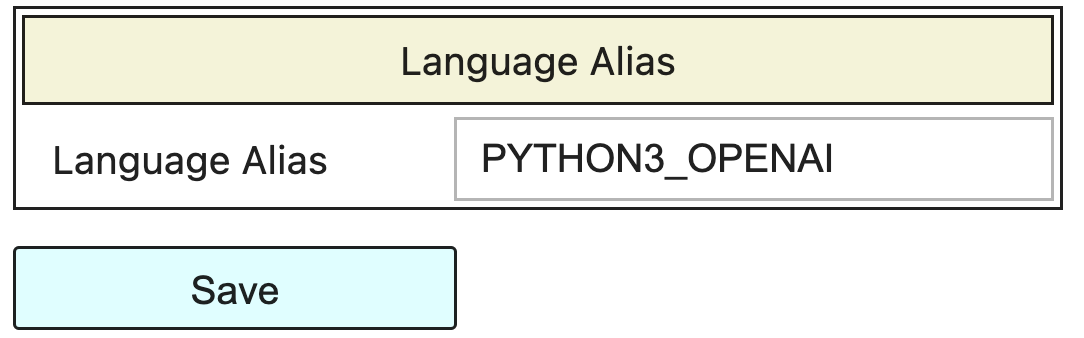

The first step is configuring the script language container repository, which clones the repository from Exasol’s Github site into the AI-Lab by executing several steps in a Jupyter Notebook environment. To distinguish different script language containers, we have to provide a unique name for our new Python environment; in our case:

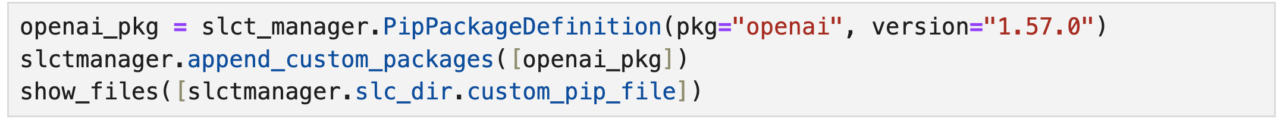

where you provide a name of choice for the Language Alias, which will be used to create and execute our new user-defined function; secondly, we configure the packages with their required versions, in addition to the default packages included by Exasol:

Finally, we build the script language container with the command:

and upload the new script language container to the database with:

Building the container takes a moment, as Docker is creating an entirely new environment from scratch – so be patient. The final step is to make the Exasol database aware of the new runtime environment. This can be done by either an ALTER SYSTEM or ALTER SESSION statement. The parameter to this statement will be displayed after the successful upload:

ALTER SESSION SET SCRIPT_LANGUAGES='PYTHON3_OPENAI=localzmq+protobuf:///bfsailab/exasol_dib/container/template-Exasol-all-python-3.10-release-PYTHON3_OPENAI?lang=python#buckets/bfsdefault/bfsailab/container/template-Exasol-all-python-3.10-release-PYTHON3_OPENAI/exaudf/exaudfclient_py3';If you need to look it up later again, you can use the following statement:

The first permanently enables one or more containers. In comparison, the latter only activates one or more containers for the current session, so remember to activate the container for your session. Once the new container has been uploaded to the Exasol database, AI-Lab issues the parameter of the ALTER [SESSION | SYSTEM] statement. We are good to go.

It is safe to say AI-Lab is a „Swiss Army Knife“ for AI/ML projects in combination with the Exasol database.

Now, let’s start with the implementation of our user-defined function for the inference of the external LLM:

CREATE OR REPLACE PYTHON3_OPENAI SCALAR

"EXTERNAL_LLM"."OPENAI_EXT_LLM"(

base_url VARCHAR(128),

api_key VARCHAR(128),

llm_model VARCHAR(128),

context VARCHAR(128),

question VARCHAR(2000000)

) EMITS (

answer VARCHAR(2000000)

) AS

from openai import OpenAI

def run(ctx):

client = OpenAI(base_url = ctx.base_url, api_key = ctx.api_key)

response = client.chat.completions.create(

model = ctx.llm_model

messages = [

{ "role" : "system", "content" : ctx.context },

{ "role" : "user", "content" : ctx.question }

],

temperature = 0.1

)

answer = response.choices[0].message.content

ctx.emit(answer)You can create the script with your favorite database tool or directly from the Jupyter environment. The user-defined function requires a URL to the LLM server, an API key, the model itself, the context, and the data it should operate on. It sends the request for processing to the externally hosted LLM, here by the LM-Studio application, and waits for the result. Invoking the user-defined functions is done by a SELECT statement:

SELECT

ID AS ID,

SUBSTR(TEXT, 0, 300) AS SHORT_TEXT,

EXTERNAL_LLM.OPENAI_EXT_LLM(

'{{BASE_URL}}', '{{API_KEY}}', '{{CONTEXT}}',

'{{LLM_MODEL}}', TEXT

) AS LLM_RESPONSE

FROM

EXTERNAL_LLM.NEWS

ORDER BY

IDIn our case, the API key can be an arbitrary string; LM Studio does not require a real API key, but we must provide one for the API call. The code should be self-explanatory. We must be cautious about the context, as it depends on the used LLM. Some LLMS are more general-use models, while others are trained for unique usage. An example context could be:

„Analyze the sentiment and answer with one word only.“

or

„Summarize the text with a maximum of 20 words.“

Conclusion

As you can see, a script language container can extend the database’s functionality in a wide range. At the same time, it ensures that neither other use cases with script language containers nor the underlying host operating system will be harmed. If a script runtime environment on the host is compromised, the databases’ script language container remains unaffected until they are separately infiltrated—a noteworthy remark concerning data security and integrity. On the other hand, packages for the user-defined functions cannot interfere with the script language environment of the underlying OS, risking potential misbehavior of the OS and, therefore, risking the entire database operations.

In our example, you might ask why the LLM is not directly executed on the database server. Inferencing, an LLM on a pure CPU environment with many passes of a Neural Network, can consume some time, and only a few databases are equipped with GPUs or Neural Processors. A shared computing resource with GPUs can speed things up significantly, even with the need for external function calls.

While different projects use different frameworks for tasks that standardized frameworks could achieve, there might be other important reasons to allow multiple frameworks – a perfect use case for individual script language containers.

Happy Exasoling!