Today I want to talk to you about integration testing, a topic that is very important but often neglected because it’s so damn tedious. This post also lays the foundation for the next one, which will explain how to make your life easier with Exasol’s Testcontainers.

But even if you’ve never heard of Testcontainers (or Exasol, for that matter), this article discusses topics that are universally relevant in software testing. So let’s dive in.

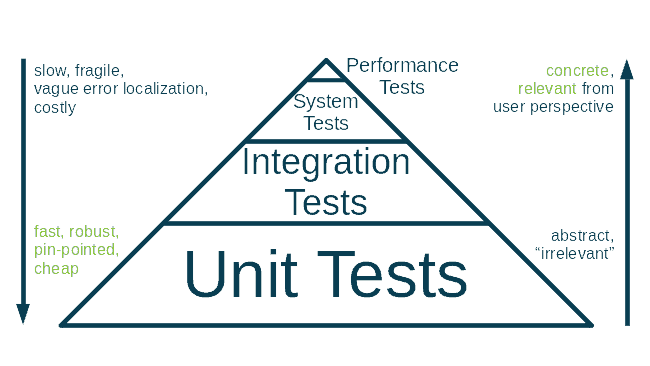

The diet pyramid of testing

When talking about testing I like to start with what I call the ‘diet pyramid of testing’. As you may already know, the regular diet pyramid is a recommendation created by nutrition experts that tells us what kind of foods are healthy for us, and in what amounts.

It’s a simple graphical representation of the fact that whole grains, vegetables and fruit are a lot healthier than cheesecake, pork pies and chocolate bars. It doesn’t say “never eat cheesecake”. The bottom line is, you need to concentrate on what your body needs – and if you largely follow this rule of thumb, something sugary or loaded with fat from time to time is okay. Just don’t overdo it.

Coming back to testing, unit tests are your bread, cereals, fruit and vegetables. Integration tests are milk products, eggs and meat (or maybe almond milk, tofu and jackfruit if you’re a vegetarian or vegan). System tests are chocolate cookies, and performance tests are the triple-fat cream pies.

If you are missing any layers in the pyramid above, let me point out that you as a developer decide on a per-project basis how many levels of test you need. And how many you can handle. Following standards blindly won’t get you very far. If your job is to write embedded code for a timer module that switches the light off in a staircase, your test strategy will look a lot different from implementing a distributed image recognition system for medical purposes.

Error localization, effort and fragility

The simple reason why you should have a solid base of unit tests is that as the scope of a test widens, the effort goes up disproportionately, while the ability to pinpoint the source of an error decreases.

What’s worse is that higher-level tests are also more fragile. Imagine writing a test for a GUI application that remotely controls the GUI by clicking buttons, filling in form fields and reading the contents of dialogs. Move one menu button to another sub-menu and all tests that used the old button break, all at the same time. Now compare this with how often UIs are typically tweaked and you get an idea of why having more GUI tests than absolutely necessary is a problem.

Another aspect to consider is that when running a unit test it’s much easier to find the source of an error than with an integration test. The more code a test covers the harder it gets to say which part of that covered code contains the mistake.

Rule of thumb: if you can, test a function on a level as low as possible. Only choose a higher test level if a low-level test is insufficient to prove that the function is implemented correctly.
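
As a small illustration of that rule, here is a minimal Python sketch. The function `staircase_light_is_on` and its parameters are hypothetical names made up for this example (borrowing the staircase timer from earlier). The only point is that logic kept free of clocks, hardware and networks can be fully verified with a plain unit test.

```python
import unittest


def staircase_light_is_on(seconds_since_press: float,
                          timeout_seconds: float = 120.0) -> bool:
    """Pure decision logic: the light stays on until the timeout has elapsed.

    Hypothetical example function. It touches no clock, hardware or network,
    so a unit test is sufficient to prove it works.
    """
    if seconds_since_press < 0:
        raise ValueError("seconds_since_press must not be negative")
    return seconds_since_press < timeout_seconds


class StaircaseLightTest(unittest.TestCase):
    def test_light_is_on_right_after_button_press(self):
        self.assertTrue(staircase_light_is_on(0))

    def test_light_is_off_once_timeout_is_reached(self):
        self.assertFalse(staircase_light_is_on(120))

    def test_negative_elapsed_time_is_rejected(self):
        with self.assertRaises(ValueError):
            staircase_light_is_on(-1)
```

Saved to a file, this runs with `python -m unittest <file>`. If a test fails, the error can only be in a handful of lines, which is exactly the localization benefit described above.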

Abstraction and relevance

Unfortunately, low level tests have their downsides too. As always there is no free lunch – to keep this analogy cooking a little longer. The biggest problem with unit tests is that from the perspective of end users they’re irrelevant. There, I said it. It hurts to admit: programmers care about unit tests — end users don’t.

If you have ever seen the V-Model, you might have noticed that there are dedicated tests intended to ensure the end-user requirements are met. They sit at the very top of the V.

Integration tests can’t be avoided

That being said, there are more than enough cases where higher-level tests like integration tests simply can’t be avoided. Your software doesn’t live in a vacuum, and as soon as you’re handling external resources or interfaces, integration tests are mandatory. To see how quickly you end up in the realm of integration tests, consider this:

- Are you modifying files?

- Do you access a database?

- Are you using services via a network?

If you answer any of those questions with a yes, congratulations, you just won an integration test.

Why high-level tests are so much effort

In my experience, raising a test to a higher level makes the effort grow disproportionately. Even a simple integration test between software that you wrote and the database that it uses to store its data requires…

- starting said database,

- creating a connection,

- authentication,

- preparing test data,

- running your test,

- checking the results,

- and of course cleaning up after the tests.
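
The steps above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: it swaps in SQLite’s in-memory database from the standard library as a stand-in for a real database server, and the `users` table and the `count_active_users` function are hypothetical names invented for the example.

```python
import sqlite3
import unittest


def count_active_users(connection: sqlite3.Connection) -> int:
    """Code under test (hypothetical): it can only be verified against a database."""
    row = connection.execute(
        "SELECT COUNT(*) FROM users WHERE active = 1"
    ).fetchone()
    return row[0]


class CountActiveUsersIT(unittest.TestCase):
    def setUp(self):
        # Start the database and connect. SQLite runs in-process, so both
        # steps collapse into one call and there is no authentication step.
        # A server database would need a running instance plus credentials.
        self.connection = sqlite3.connect(":memory:")
        # Prepare the test data.
        self.connection.execute("CREATE TABLE users (name TEXT, active INTEGER)")
        self.connection.executemany(
            "INSERT INTO users (name, active) VALUES (?, ?)",
            [("alice", 1), ("bob", 0), ("carol", 1)],
        )
        self.connection.commit()

    def tearDown(self):
        # Clean up: closing the connection discards the in-memory database.
        self.connection.close()

    def test_counts_only_active_users(self):
        # Run the test and check the result.
        self.assertEqual(count_active_users(self.connection), 2)
```

Even in this toy version, the setup and cleanup code is longer than the test itself. With a server-based database, `setUp` would additionally have to start the instance and authenticate, which is where the real effort comes from.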

Now imagine an end-to-end system test with six services involved. Your effort just went through the roof because now you need the infrastructure to set up those six services, connect them, bring them into a deterministic start state, run your test, collect the test logs and – well, you get the picture, I could go on for an hour with this.

What’s worse is that in that end-to-end scenario the setup is so time-consuming and expensive that you probably have to share it with other developers. And they probably need slightly different configurations for their tests. If you as a team are not very careful, you break each other’s tests.

Resist the temptation to test manually

We’re now approaching what I consider the core of the integration test problem. Since integration tests are so hard to set up, the temptation is huge to create the setup by hand, connect to it and run the tests against it. Some consider this semi-automatic testing.

My advice: please don’t!

This has all kinds of negative side effects:

- No complete reproducibility

- Lots of effort to restore the setup after a disastrous failure

- The dreaded “Works on my machine”™ issue

- It breaks the concept of Continuous Integration

- It isn’t available for contributors

All in all, this is a situation you want to avoid at all costs. And if you’re having any doubts, we all know that “manual testing” is just a fancy way of saying “untested”.

When you do automatic testing, do it all the way. That means you have to set up your complete test environment via code, including all dependencies. Only then can a CI server or an external contributor run those tests too.

Virtualization to the rescue

Observant readers probably already guessed it: virtualization allows you to fully automate integration tests. Sadly, it also increases the complexity of your test setup. Again. That is why you should carefully select which virtualization option, and which framework around it, is best suited to make your life as an integration tester easier.

Docker, Exasol and Testcontainers

For integration tests that involve an Exasol analytics database you can benefit from the fact that we provide Docker images of Exasol. That means you need a Docker installation on your development machine and CI server. Now combine that with the open-source framework Testcontainers, which was created to reduce the boilerplate code required to set up Docker-based integration test environments.

Enter ‘Exasol Testcontainers’. In a nutshell, Exasol Testcontainers provides a plugin to the Testcontainers framework that allows you to set up and run an Exasol instance with no more than a handful of lines of code. That way you can spend your time on implementing the actual test logic instead of the test setup.

In the next article we’ll discuss concrete examples of running integration tests against Exasol using Exasol Testcontainers.

Conclusion

Testing is hard. And it gets harder the closer the tests get to the domain of the end user. To overcome this, make sure your test strategy has a solid foundation of unit tests and add higher-level tests only where unit tests are insufficient. You can’t avoid integration tests, but you can make your life easier with virtualization and an integration test framework. And to end with my diet pyramid analogy, I think you’ll agree this method makes integration tests a heck of a lot easier to swallow.